

10GB vram enough for the 3080? Discuss..

- Thread starter Perfect_Chaos

- Start date

- Status

- Not open for further replies.

Soldato

- Joined

- 21 Jul 2005

- Posts

- 21,171

- Location

- Officially least sunny location -Ronskistats

Because some people won’t just let go. Others are just waiting for the day for that first game to come out that really needs more than 10gb so they can prance about in here saying told you so, nana nana na naaaa... lol.

It's a bit like the "AMD drivers suck" retort. In the main it's not an issue, but people like to focus on and exaggerate it, as they think it's the Achilles heel of the brand.

Yeah. It is silly. AMD drivers were solid for a very long time; they only messed it up with the 5700 and the black screen crap. I have had loads of AMD GPUs and they have been just as good with drivers as Nvidia in my experience. It was sad they dropped the ball with the drivers last year, as suddenly the "AMD drivers are crap" stuff came back again in a big way.

You can’t, it is either 10 or 20.

12 would only work on the bigger bus size on the 3090.

I see. Well, it makes sense to me now: 10GB, or 20GB, which is a waste of RAM and would halve the stock level in an already low-stock environment. But that surely falls on whoever designed the card so it can only take 10GB or 20GB. It sounds like an idiotic design decision. I think in 2021 GTAV Enhanced and Cyberpunk 2077, possibly with a Cyberpunk texture pack, will really kill 10GB and exceed it. So that screws over people like me: suddenly I am not able to turn off ray tracing and everything else except the textures and still enjoy it. They will want me to upgrade. Well, I already proved with the previous launches that I am more than prepared to sit it out and leave them without a sale if they want to be like that.

I will not waste money on a 20GB 3080. I will keep my 2080 Ti until the 4080, or whatever it is called. I will wait.

Because some people won’t just let go. Others are just waiting for the day for that first game to come out that really needs more than 10gb so they can prance about in here saying told you so, nana nana na naaaa... lol.

Is this a tongue-in-cheek comment or do you seriously lack this much self-awareness?

Were you not the one that bumped this thread with the Cyberpunk 2077 recommended spec sheet when it was 2 days old?

Were you not the one who posted an image about 10GB of VRAM being enough for you, yet you still seem to appear in this thread arguing it, as if trying to convince yourself that it is the right choice?

Not only the 5700: I had, and still have, driver issues with Polaris cards. I had problems with the RX 480 when it was released and am now having problems with the RX 570. Plus I really hate that stupid-looking control panel, full of useless features but lacking basic stuff like refresh rate.

While 100% correct how the fudge is this still being discussed and argued? lol.

I don't really get all the fuss about 10GB. Turn down the texture setting one notch in the event that a game releases needing more than 10GB. Even on the 6800 XT, you will definitely be turning down RTX-related settings compared to the 3080. It entirely depends on what one's preferences are: whether you want RTX or textures.

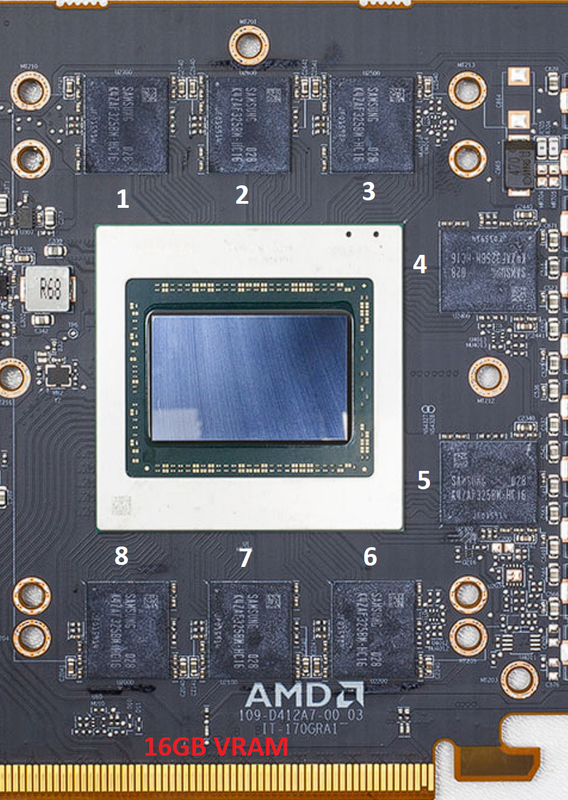

For GDDR6, which the AMD cards use, it's about $11.69 per 1GB module, so 16GB of that is $187.04.

AMD doesn't use 1GB chips but 2GB chips on the RX 6800 series: GDDR6 | Samsung Semiconductor Global Website

AMD Radeon RX 6800 XT Review - NVIDIA is in Trouble | TechPowerUp

RTX 3080 has free space for up to 12GB GDDR6X:

MSI GeForce RTX 3080 Suprim X Review - The Biggest Graphics Card in the World | TechPowerUp

That's the thing: the card will run out of compute power before it runs out of memory. People are still hung up on how memory used to behave; this Ampere range works differently. And I wouldn't mind, but some smart chap earlier pointed out that we have indeed been measuring memory wrong for years, and committed memory is very different to utilised memory.

Anyway, a dog chasing its own tail will arrive shortly to explain why that's a smart move, and to them I say "OK, don't buy the 3080".

We should now be looking at the smart cache on the 16GB AMD cards: are they bandwidth starved at 4K?

RTX 3080 has free space for up to 12GB GDDR6X:

MSI GeForce RTX 3080 Suprim X Review - The Biggest Graphics Card in the World | TechPowerUp

It might be the same PCB as the 3090 and the 3090 might use 12 x 2GB GDDR6X

Associate

- Joined

- 1 Oct 2009

- Posts

- 1,237

- Location

- Norwich, UK

The 1GB chip size was just an example of a specific size of memory on a chip; I wasn't saying that's what any specific card uses. The point is that when you're limited to multiples of something, that's part of the overall restriction. The 3080 shares reference design similarities with the 3090, which uses 12 chips on that side of the PCB (and then 12 on the rear).

The overall point is that these things are all limitations, which means you cannot pick an arbitrary amount of RAM for any given architecture: you're forced into picking a certain vRAM configuration or otherwise forced to change parts of the architecture. The 6800XT, for example, couldn't just use 15GB instead of 16GB. That's not how this works; it's more complicated than that.
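To make the "multiples" restriction concrete, here is a rough sketch in Python. It assumes each GDDR6/GDDR6X chip sits on its own 32-bit slice of the memory bus, that a board can optionally double up with two chips per slice (the front-and-rear layout mentioned above), and that the bus widths are 320-bit for the 3080, 384-bit for the 3090 and 256-bit for the 6800XT. The chip densities passed in (1GB only for GDDR6X, 1GB or 2GB for GDDR6) are my assumption about what was available at the time, and `vram_options_gb` is just an illustrative helper, not anything from a vendor tool.

```python
# Sketch only: which VRAM totals a given memory bus width allows.
# Assumption: one GDDR6/GDDR6X chip per 32-bit slice of the bus,
# or two chips sharing a slice when chips go on both sides of the PCB.

CHIP_INTERFACE_BITS = 32


def vram_options_gb(bus_width_bits, chip_sizes_gb):
    """Return the sorted VRAM capacities (in GB) reachable on this bus."""
    slices = bus_width_bits // CHIP_INTERFACE_BITS
    options = set()
    for size_gb in chip_sizes_gb:
        options.add(slices * size_gb)      # one chip per slice
        options.add(slices * size_gb * 2)  # two chips per slice (front + rear)
    return sorted(options)


# Hypothetical inputs: (bus width in bits, chip densities in GB assumed available).
cards = {
    "RTX 3080": (320, (1,)),
    "RTX 3090": (384, (1,)),
    "RX 6800XT": (256, (1, 2)),
}

for name, (bus_width, chip_sizes) in cards.items():
    print(name, vram_options_gb(bus_width, chip_sizes))
```

Under those assumptions it gives 10 or 20 for the 3080, 12 or 24 for the 3090, and 8, 16 or 32 for the 6800XT. 12GB never shows up on a 320-bit bus and 15GB never shows up on a 256-bit bus, which is the restriction being described: to get a different number you have to change the bus width or the chips, not just solder on a bit more.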

RTX 3080 has free space for up to 12GB GDDR6X:

MSI GeForce RTX 3080 Suprim X Review - The Biggest Graphics Card in the World | TechPowerUp

It might be the same PCB as the 3090 and the 3090 might use 12 x 2GB GDDR6X

Because it's the same PCB as the 3090.

Majority of what I say is tongue in cheek, thought most realised that by now

I do not need to convince anyone, no upside for me. I just enjoy the debate and say it as it is

Does it bother you that I think 10gb is enough for me?

Haha

Majority of what I say is tongue in cheek, thought most realised that by now

I do not need to convince anyone, no upside for me. I just enjoy the debate and say it as it is

Does it bother you that I think 10gb is enough for me?

As long as game devs don't feel the need to cater to your gimped card I don't care.

Oh but they will. It will do just fine until Hopper is out.

Associate

- Joined

- 3 Mar 2015

- Posts

- 385

- Location

- Wokingham

It's about time a GPU manufacturer designed a board with memory expansion ports on it.

I know it's special fast memory and is deeply integrated, but still the industry should have that ambitious target.

As long as game devs don't feel the need to cater to your gimped card I don't care.

I'd imagine the 3060Ti will be the most popular card. It will have 8GB of GDDR6. Sure, you may get a texture pack that uses more, but so far such texture packs have not been worth the drive space.

It's about time a GPU manufacturer designed a board with memory expansion ports on it.

I know it's special fast memory and is deeply integrated, but still the industry should have that ambitious target.

All things being equal, GPU performance is found lacking before VRAM is an issue. Expansion ports would also slow performance, add to the cost of the card and make for incredibly expensive upgrades.

Associate

- Joined

- 1 Oct 2009

- Posts

- 1,237

- Location

- Norwich, UK

I see. Well, it makes sense to me now: 10GB, or 20GB, which is a waste of RAM and would halve the stock level in an already low-stock environment. But that surely falls on whoever designed the card so it can only take 10GB or 20GB. It sounds like an idiotic design decision. I think in 2021 GTAV Enhanced and Cyberpunk 2077, possibly with a Cyberpunk texture pack, will really kill 10GB and exceed it. So that screws over people like me: suddenly I am not able to turn off ray tracing and everything else except the textures and still enjoy it. They will want me to upgrade. Well, I already proved with the previous launches that I am more than prepared to sit it out and leave them without a sale if they want to be like that.

I will not waste money on a 20GB 3080. I will keep my 2080 Ti until the 4080, or whatever it is called. I will wait.

The architecture is normally designed to cope with multiple cards at multiple performance levels, with multiple variants of the GPU and different memory capacities. It's all a big trade-off. You can see that AMD targeted 16GB, for example, which is greater than 10GB, but they've also probably over-provisioned their cards: certainly the lower-end models, but probably the 6900XT as well. That thing will never use 16GB and have playable frame rates. They could have got away with 12GB or maybe even 10GB, but odds are their architecture would only allow 8GB as the next lowest vRAM config, which would not be enough for the 6800XT or 6900XT for sure. So you're paying more for memory you can't use. Does that mean it's an idiotic design decision? No. It's a trade-off of the architecture; these kinds of trade-offs are made with every architecture in both camps.

Games like CoD Cold War have high-resolution texture packs which, when installed, push the install to 130GB on disk, and it runs in 10GB of vRAM just fine even with ray tracing and all the effects on Ultra. In fact it's a good example of a modern game which is GPU/compute bottlenecked: you can't run that game maxed out in 4K on a 6800XT, for example, where you get about 20FPS, and even on the 3080 with better RT performance you still only get about 40FPS. Yet we're not exceeding 10GB of vRAM. This follows a trend established by most of the very newest AAA titles: the GPU bottlenecks before vRAM does.
