The 6800xt at one point was at nearly 12GB, but the 3090 had not gone over 10GB.

10GB vram enough for the 3080? Discuss..

- Thread starter Perfect_Chaos

- Status

- Not open for further replies.

> I assume DLSS would have less of an impact if the tensors are doing memory compression too, unless they're just doing that all the time anyway?

They do memory compression all the time, afaik. DLSS reduces VRAM usage by 1GB+ in most cases, depending on the preset you use: on quality mode it seems to be about 1GB, and performance mode reduces it by 1GB-1.5GB.

> Then it wouldn't run on consoles, and since that's where the bread and butter of most companies is located, it won't be happening any time soon.

Scale up from consoles or down to consoles. Consoles are not the upper limit of quality.

Developers won't expend any more resources on the PC version than they have to. Unfortunate, but this has been the case for many years.

Associate

- Joined

- 1 Oct 2009

- Posts

- 1,224

- Location

- Norwich, UK

generally we didn't in the past? or for new games moving forward?

what if we have moved past the era of copy and paste a tree?

It's not a past/future thing, it's about open world vs closed world. Any specific hardware generation has a certain amount of processing power and storage as resources, and you need to decide how to spend those resources. If you make an open world game you generally spread those resources across a larger playable area, whereas closed world games have smaller spaces but more detail. Tricks like copying and pasting trees/foliage or a grass texture are used because they save resources in ways that aren't obvious to the player. Each generation the detail of both open and closed worlds gets better, but the distinction between open and closed always remains: if you have a larger area, the same amount of assets is spread thinner across it.

I have no idea if I said that but that looks all kinds of wrong. Oh right, the VRAM usage in the video.

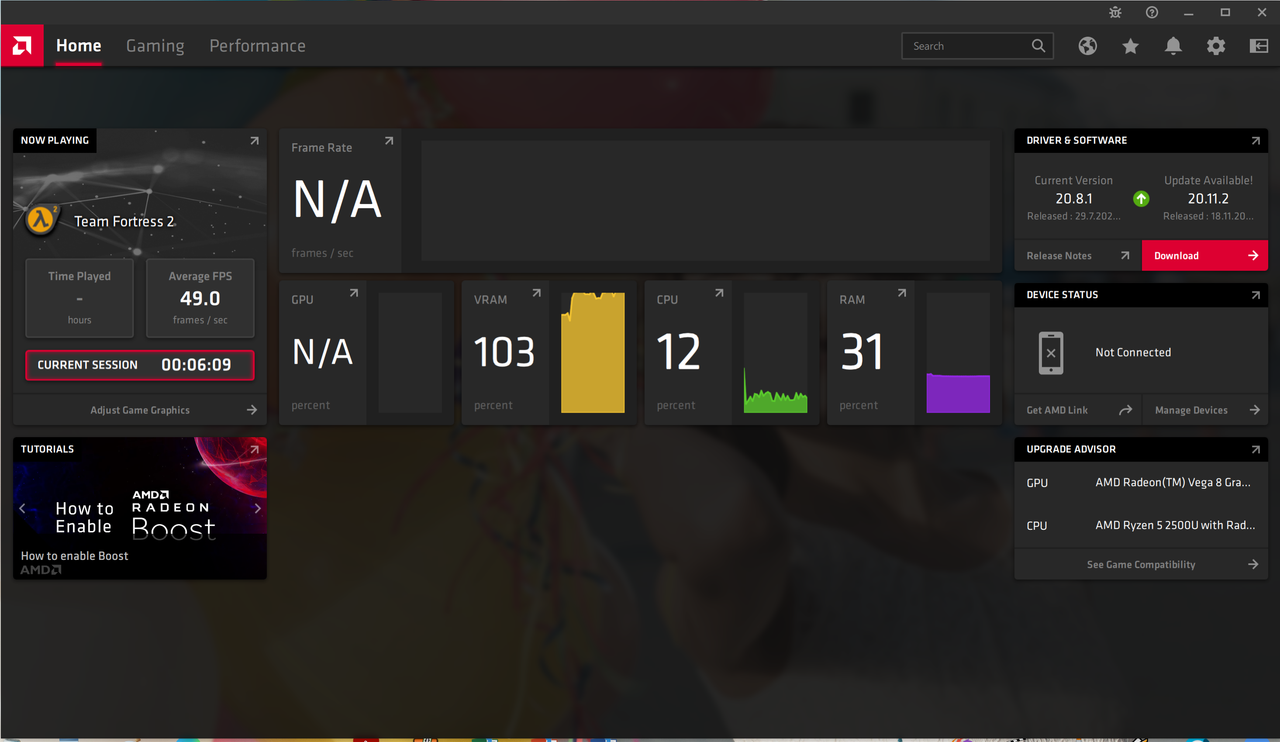

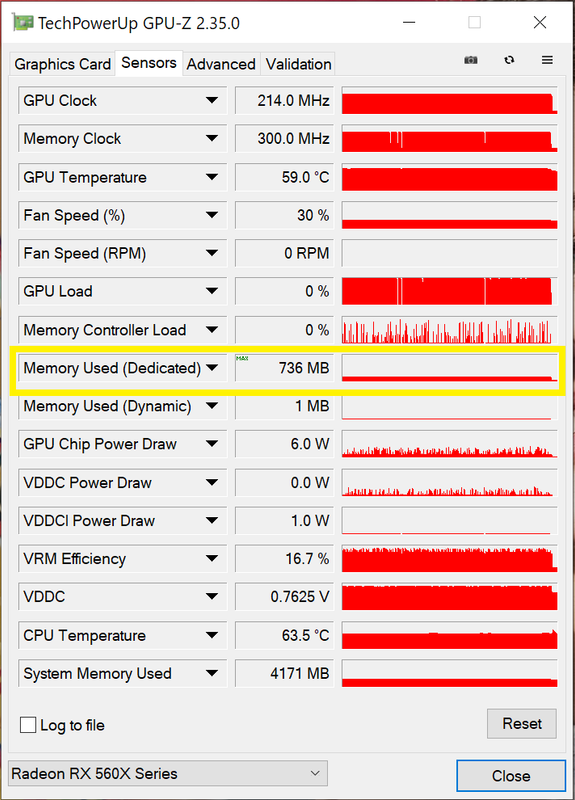

It's not clear what he's measuring there. With the MSI Afterburner Beta you can measure a lot of different things related to vRAM now:

1) Total system usage

2) Shared usage

3) Shared usage per process

4) Dedicated usage

5) Dedicated usage per process

What we're interested in is dedicated usage per process, which inspects the process itself (the game) and looks at its real memory budget. What he has on display is dedicated usage, which is always going to be lower than total, but typically not by much, and that's what you see from his numbers. The major difference comes with dedicated usage per process: that's when you stop looking at merely what the game asks for and instead look at what it's using, and that's when you can get very big differences in allocated vs used.

You see parts of the center of the city in Zero Dawn where the 3090 is winning on frame rate with a lower vRAM budget. The fact that it's a few GB behind doesn't make any functional difference, since it can still provide a higher frame rate, which is another indicator that it's not all genuinely in use.
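To make the counter distinction concrete, here is a minimal sketch. The readings are made-up numbers, not taken from the video or from any tool's output, and `VramReading` and `outside_game_mb` are hypothetical names. It only shows how a whole-GPU dedicated figure and a per-process figure for the game can sit a couple of GB apart on the same card.

```python
from dataclasses import dataclass


@dataclass
class VramReading:
    """One overlay reading in MB. All values used below are invented."""
    label: str
    dedicated_mb: int               # dedicated usage, all processes on the GPU
    dedicated_per_process_mb: int   # dedicated usage of the game process only


def outside_game_mb(reading: VramReading) -> int:
    """VRAM counted in the whole-GPU figure that the game process does not hold."""
    return reading.dedicated_mb - reading.dedicated_per_process_mb


# Hypothetical readings for two cards running the same scene.
readings = [
    VramReading("card A", dedicated_mb=11800, dedicated_per_process_mb=9300),
    VramReading("card B", dedicated_mb=9900, dedicated_per_process_mb=8700),
]

for r in readings:
    print(f"{r.label}: whole GPU {r.dedicated_mb} MB, "
          f"game {r.dedicated_per_process_mb} MB, "
          f"gap {outside_game_mb(r)} MB")
```

If a comparison quotes only the whole-GPU figure, the gap column is invisible, which is why it matters which counter a video is showing.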

> It's not a past/future thing, it's about open world vs closed world. Any specific hardware generation has a certain amount of processing power and storage as resources, and you need to decide how to spend those resources. If you make an open world game you generally spread those resources across a larger playable area, whereas closed world games have smaller spaces but more detail. Tricks like copying and pasting trees/foliage or a grass texture are used because they save resources in ways that aren't obvious to the player. Each generation the detail of both open and closed worlds gets better, but the distinction between open and closed always remains: if you have a larger area, the same amount of assets is spread thinner across it.

Yes, but we are at the point where the base quality (console) of open world will be increasing.

> Developers won't expend any more resources on the PC version than they have to. Unfortunate, but this has been the case for many years.

So no point in having extra ray tracing performance then either, since all we will get is what the consoles can do.

Probably. You have to realise that PC gaming itself could be so much more advanced, if these companies weren't also having to think about consoles.

> You will see that when games for PS5 come out your 10GB of VRAM will go down the drain in a millisecond at 4K, and probably at 1440p too, simply because stuff needs space. Whatever tech you have, what is on screen has to be in VRAM, and there is little R&D there....
>
> ...
>
> Going back on topic, 10GB was just another attempt by Nvidia to double dip and sell you a 20GB "Super" version in less than a year. It is shocking to read adults writing nonsense like "nvidia said so"; how sad a life must one have to be so gullible to a corporation? And I'm not sure about GDDR6X, but non-X is about 15 quid per GB.
>
> ...

That was a lot of gum flapping.

PS5 only has 16GB of RAM total, same as the new Xbox. How much of that do you think will be eaten by the O/S, housekeeping, game code and data, etc.? Or to be more exact, how much of that 16GB do you think will be used for graphics?

I intend a 3080 to last me no longer than the Hopper/RDNA3 release. It's obvious these new cards eat too much power and, even on Nvidia, don't have enough RT performance to survive as long as a card like the 1080Ti did. Maybe it will be the people buying 6800/6800xt cards that Nvidia will capture with a 20GB version of the 3080.

Instead of the possible 12GB, let's jump straight double to 20GB... Why?

> So no point in having extra ray tracing performance then either, since all we will get is what the consoles can do.

Some features scale with no significant additional work from developers. For example, once you've put in ray tracing for reflections, you can vary the number of rays you shoot to do reflective surfaces. That varies the quality of the result and the performance impact: you can get a cleaner and sharper reflection on a card with more RT acceleration, and all you really need to do is give PC gamers the menu option.
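As a rough sketch of that idea, assuming a made-up preset table (the names and ray counts in `RAYS_PER_PIXEL` are hypothetical, not from any real game) and a random number standing in for an actual traced ray: the rendering path is identical for every preset, and only the ray count read from the menu changes. For a Monte Carlo average, the noise falls roughly as 1/sqrt(N) while the cost grows roughly linearly with N.

```python
import math
import random

# Hypothetical menu presets: reflection rays per pixel (illustrative values only).
RAYS_PER_PIXEL = {"low": 1, "medium": 2, "high": 4, "ultra": 8}


def sample_reflection(rng: random.Random) -> float:
    """Stand-in for tracing one reflection ray: returns a noisy brightness sample."""
    return rng.random()


def reflect_pixel(preset: str, rng: random.Random) -> float:
    """Average N ray samples; N is the only thing the menu setting changes."""
    n = RAYS_PER_PIXEL[preset]
    return sum(sample_reflection(rng) for _ in range(n)) / n


def relative_noise(preset: str) -> float:
    """Noise of an N-sample average relative to a single ray: 1/sqrt(N)."""
    return 1.0 / math.sqrt(RAYS_PER_PIXEL[preset])


rng = random.Random(0)
for preset, rays in RAYS_PER_PIXEL.items():
    print(f"{preset}: {rays} rays/pixel, relative noise {relative_noise(preset):.2f}, "
          f"sample pixel {reflect_pixel(preset, rng):.3f}")
```

Going from 1 to 4 rays halves the noise for about four times the ray cost, which is the kind of trade a card with more RT acceleration can afford.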

You won't need a card to last as long as the 1080ti did, assuming development picks up as well as competition.

Following AMD's launch

Just to clarify, you want whichever GPU you buy to last you no longer than the next GPU refresh? In which case surely either of them will do this extremely simple task, as in the meantime I don't see any non-Hopper or non-RDNA3 GPU really beating the AMD/NVIDIA offerings ...

Is there not a single game or benchmark available at present which shows the 6800XT destroying the 'VRAM-crippled' 3080 in-game due to its 16GB of VRAM?

COD is a good example only if you are talking to a 16-year-old (the typical target of online-only games); it is no graphical marvel, as the engine is built for people who like to play the same crap over and over. If we think open world, think MSFS for size, or Cyberpunk for texture quality and size. As said previously, and as I do when I develop myself: if I want to pack 4K textures onto small objects because I, as a developer, want to see quality in my work, then you, the user, need VRAM to store the lovely textures I painted on my models. If I want to offer you detail I use maps, including normal maps; these take lots of lovely VRAM and give you lots of detail instead of flat-looking textures.
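For a sense of scale, here is a back-of-the-envelope sketch of what one 4K texture costs in memory. It assumes uncompressed RGBA8 at 4 bytes per texel and, for comparison, a block-compressed format at 1 byte per texel (the rate of BC7, for example). It ignores block padding on the smallest mip levels, so treat the compressed figures as approximate. The function names and the three-map material are illustrative, not taken from any engine.

```python
def mip_chain_texels(width: int, height: int) -> int:
    """Total texels in a full mip chain, halving each level down to 1x1."""
    total = 0
    while True:
        total += width * height
        if width == 1 and height == 1:
            break
        width = max(1, width // 2)
        height = max(1, height // 2)
    return total


def texture_mib(width: int, height: int, bytes_per_texel: float,
                mipmaps: bool = True) -> float:
    """Approximate memory footprint of one texture in MiB."""
    texels = mip_chain_texels(width, height) if mipmaps else width * height
    return texels * bytes_per_texel / (1024 * 1024)


# One 4096x4096 map, uncompressed RGBA8, base level only.
print(texture_mib(4096, 4096, 4, mipmaps=False))  # 64.0

# The same map with a full mip chain (about a third more).
print(round(texture_mib(4096, 4096, 4), 2))

# A hypothetical three-map material (e.g. colour, normal, one extra map).
for name, bpt in (("uncompressed RGBA8", 4), ("block-compressed, 1 byte/texel", 1)):
    print(f"{name}: {3 * texture_mib(4096, 4096, bpt):.0f} MiB per material")
```

Multiply the per-material figure by the number of distinct materials visible at once and it is easy to see how texture choices, more than anything else, drive the VRAM budget being argued about here.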

You will see that when games for PS5 come out your 10GB of VRAM will go down the drain in a millisecond at 4K, and probably at 1440p too, simply because stuff needs space. Whatever tech you have, what is on screen has to be in VRAM, and there is little R&D there.... we already have:

- Occlusion Culling

- Deferred Rendering

To name two that are useful for keeping textures at bay. Yet what you see has to be in VRAM, so 10GB is yet another scam from Nvidia, after they tried to scam consumers with the 20xx series and its outrageous prices. As we all saw, those sold nothing and left the poor consumers with years of no titles to use them for, despite the super premium paid over 10xx, until a £500 console came out. Embarrassing. Now we will see plenty of RTX, so once again console leads the way. 2060 equivalent or not, there is a ton of value there, and funnily enough the average console user isn't as gullible: consoles would never sell at 1k apiece.

The point is PC gamers are getting more gullible by the day and sooner or later will kill the platform for gaming. How many people do you think will get a PC with overpriced parts instead of a 4K-capable console? Pure, simple, basic economics: PCs aren't really Veblen goods, and the average parent isn't going to spend £700 on a single piece of hardware. Going into the second-hand market for a 2060 (which is a joke of a card, by the way) isn't something many would love to do, and even there the VRAM is still too little.

Going back on topic, 10GB was just another attempt by Nvidia to double dip and sell you a 20GB "Super" version in less than a year. It is shocking to read adults writing nonsense like "nvidia said so"; how sad a life must one have to be so gullible to a corporation? And I'm not sure about GDDR6X, but non-X is about 15 quid per GB.

Games will be even more unoptimized on Nvidia cards this gen; I shall keep this here and come back in a while.

Surely they were not expecting AMD to hit them, nor probably was anyone. Now they offer absolutely zero value; they sell only because people are so easily deceived that it is kind of embarrassing.

It seems lots of people want these cards, but it just seems so... The 20xx series seemed like god knows what, then once the numbers were seen they were probably one of Nvidia's biggest failures as far as I remember (and that goes back to the Commodore era). It isn't just an Nvidia problem: AMD, having seen the poor judgement of PC gamers, are making extra profit by charging only £50 less, and they have ZERO pedigree to do so. At least Nvidia has many series under their belt in past years. AMD just said "*** them, they are dumb, let's charge them as much as our competitor"; I would not be surprised if they used exactly those words. To stay on topic, at least they are giving you enough VRAM to last years, and probably this time games will run better on their cards. FUTURE GAMES, NOT GAMES THAT CAME OUT BEFORE.

I don't see Nvidia cards as viable for 4K, and barely for 1440p, as my 1080ti is saturated in games like MSFS.

I see AMD selling more of the 6800 since, despite costing a bit more than a 3070, it offers better performance and above all more VRAM.

The rest is overpriced garbage, from both AMD and NVIDIA, that will sell maybe 200k units, which is nothing for a developer to optimize their games for. Even 500k is nothing if you put it next to millions of consoles sold with more VRAM.

And we are talking value vs performance on average, not cost vs other goods such as an iPhone (which is a Veblen good anyway)...

You are citing MSFS as an example or parameter to measure games by, so let's run with this.

How does the 6800 or 6800XT fare in MSFS compared to your 1080ti in 4K? And finally, how does the 3080 do?

If people are saying the 3080 isn't good enough for 4K gaming for the next 2 years, then surely this means the 6800XT isn't fit for purpose either, as it seems to be getting beaten by the 3080 in nearly every single benchmark at 4K?

https://www.guru3d.com/articles-pages/amd-radeon-rx-6800-xt-review,20.html

> Some features scale with no significant additional work from developers. For example, once you've put in ray tracing for reflections, you can vary the number of rays you shoot to do reflective surfaces. That varies the quality of the result and the performance impact: you can get a cleaner and sharper reflection on a card with more RT acceleration, and all you really need to do is give PC gamers the menu option.

The same can be said for ultra-high-detail textures and assets. You create them to the highest detail and scale them back.

Exactly. By using MSFS as his pole to beat GPUs with... the 6800XT in 4k is behind the 3080, so his post demeaning the NVIDIA series of GPUs should extend to the AMD cards too.
