The 20GB 3080 could be cancelled https://www.techpowerup.com/273637/nvidia-reportedly-cancels-launch-of-rtx-3080-20-gb-rtx-3070-16-gb

10GB vram enough for the 3080? Discuss..

- Thread starter Perfect_Chaos

- Start date

- Status

- Not open for further replies.

Cutting their losses with Samsung and moving to TSMC 7nm for the Super/Ti versions I guess. More VRAM, better performance, lower power draw, actual stock...

Maybe they'll even go as far as to call it the 4000 series. Haven't had a whole new series on the same architecture since Kepler on the Nvidia side.

Will depend on the contract with Samsung. A node shift has happened before. 65nm to 55nm if my memory is still good. GT200a and then GT200b.

Associate

- Joined

- 1 Oct 2009

- Posts

- 1,213

- Location

- Norwich, UK

The move to Samsung was obviously about TSMC and their prices. They refused to drop the 7nm prices for Nvidia, basically counting on Nvidia not having an alternate source to go to, so Nvidia called that bluff and used the cheaper Samsung fab, which led to TSMC dropping their prices straight away.

On power draw the leaked big Navi numbers look to be revised just recently, and their 3080 equiv will be pulling the same amount of power. So it's not clear to me that TSMC 7nm is significantly better per watt. Time will tell I suppose.

Yeah, if this rumour about no 20GB card is true then it is a bit of a setback for me on a 1080 Ti. I would like to have more VRAM than my previous card.

I could always go AMD, but I am on a G-Sync monitor and was looking forward to playing some RT games like Cyberpunk and Control.

Could always wait for these 7nm cards but could be another 6 months away.

Why not buy the 3090? Plenty of VRAM on that.

The theory I've forwarded is quite simple. The purpose of putting data into vRAM is so the GPU can use that data to construct the next frame, and the more complex the scene the GPU has to render, the longer it takes, and thus the frame rate goes down. That is to say, put more data into vRAM and your performance is going to go down because the GPU has more work to do. Then it becomes a simple matter of bottlenecks. Which gives out first? Do you run out of vRAM with performance overhead left, or do you run out of performance with vRAM overhead left? The answer to date is the latter. As we load up modern games at 4K Ultra presets we see the GPUs struggling with frame rate while vRAM usage is way below 10GB.
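To make the "which gives out first" test concrete, here is a minimal Python sketch. The `Sample` record, the 60 fps target and every number in the example are made up for illustration; they are not measurements from any game or card.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One benchmark run at a given settings level (hypothetical data)."""
    label: str
    vram_used_gb: float  # memory actually in use, not merely allocated
    fps: float


def first_bottleneck(samples, vram_capacity_gb, target_fps):
    """Walk samples from lightest to heaviest settings and report which
    limit is hit first: 'gpu' (frame rate below target), 'vram' (usage
    above capacity) or 'both'. Returns None if neither limit is reached."""
    for s in samples:
        gpu_limited = s.fps < target_fps
        vram_limited = s.vram_used_gb > vram_capacity_gb
        if gpu_limited and vram_limited:
            return s.label, "both"
        if gpu_limited:
            return s.label, "gpu"
        if vram_limited:
            return s.label, "vram"
    return None


# Illustrative numbers only.
runs = [
    Sample("1440p high", 5.1, 120.0),
    Sample("4k high", 6.8, 75.0),
    Sample("4k ultra", 8.2, 52.0),
]
print(first_bottleneck(runs, vram_capacity_gb=10.0, target_fps=60.0))
```

With these invented numbers the frame rate drops below target before usage reaches 10GB; with a smaller card the same runs would hit the vRAM limit first, which is the edge case the argument has to allow for.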

The major advancement in our understanding of this whole thing also has to do with now having tools that more accurately report vRAM in use, rather than what is allocated; the two values can, and typically do, differ substantially.

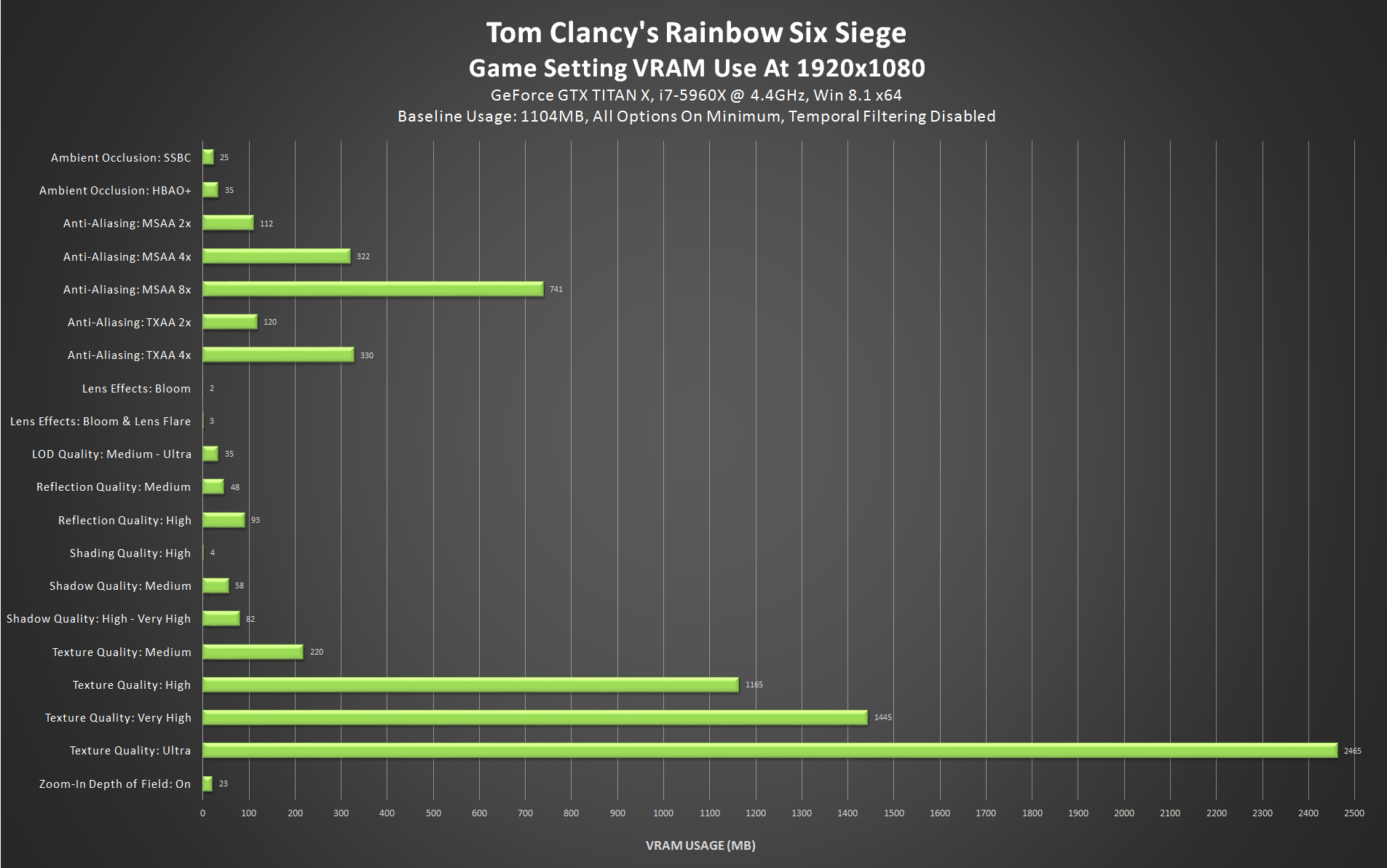

Textures are the biggest users of VRAM and, from the following information, have one of the lowest performance penalties.

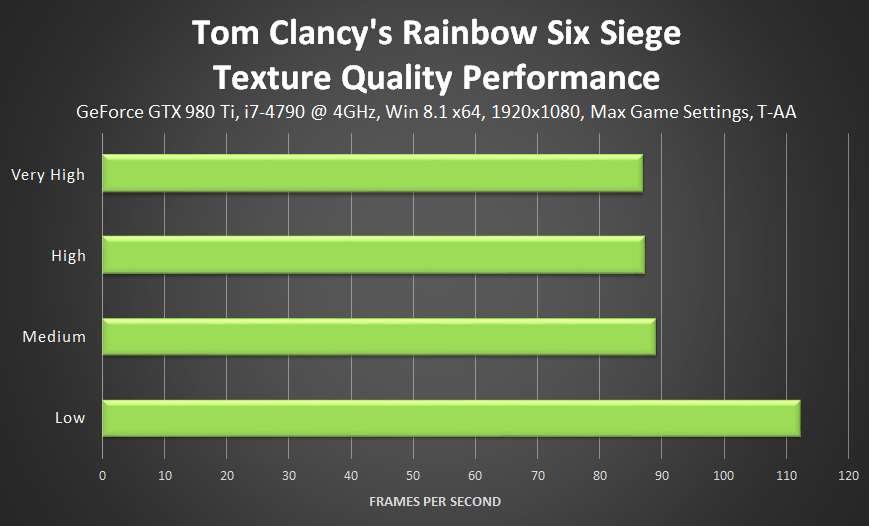

Nvidia's performance guide for Rainbow Six Siege

The content links are broken, so use your browser's find feature to navigate this page.

https://www.nvidia.com/en-us/geforc...bow-six-siege-graphics-and-performance-guide/

In the majority of games raising the Texture Quality setting has a negligible impact on performance. The difference here comes from Low dialing down the geometric detail of objects, giving a big boost to performance. Excluding Low, the delta between detail levels is just a few frames per second.

Here is a comparison of VRAM usage for the different settings. As we can see, texture quality has the biggest impact on VRAM usage and, according to Nvidia, the smallest performance impact in the majority of games.

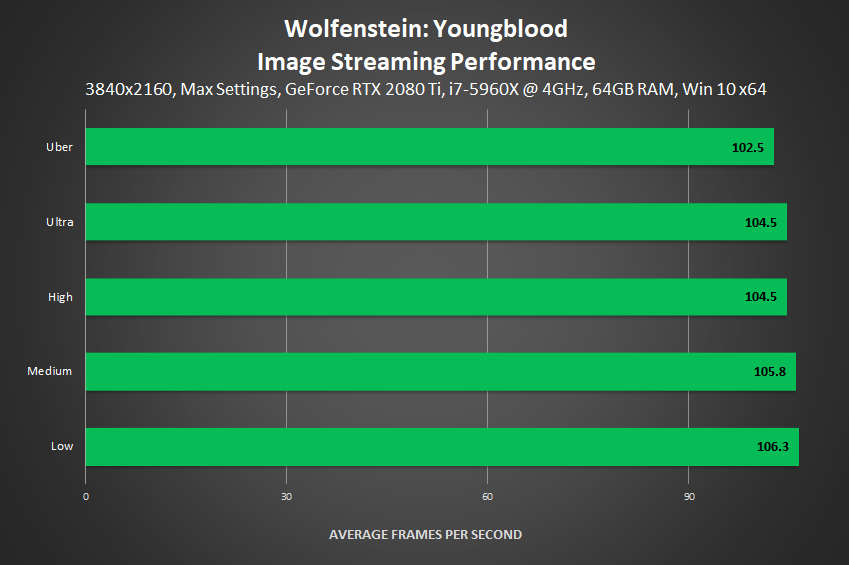

Nvidia's performance guide for Wolfenstein: Youngblood

https://www.nvidia.com/en-us/geforc...guide/#wolfenstein-youngblood-image-streaming

With no other bells and whistles tied to the Image Streaming setting, the level of detail you select should be dictated by your GPU's VRAM. With 8GB or more, everything should be gravy at 4K, though those with 6GB may find they need to drop to Ultra. If you have less than 6GB, we recommend limiting your gaming to Ultra at 1920x1080 or 2560x1440.

As we can see from the graph, the performance impact is minimal. Other settings in the game offer far more performance savings from being lowered.

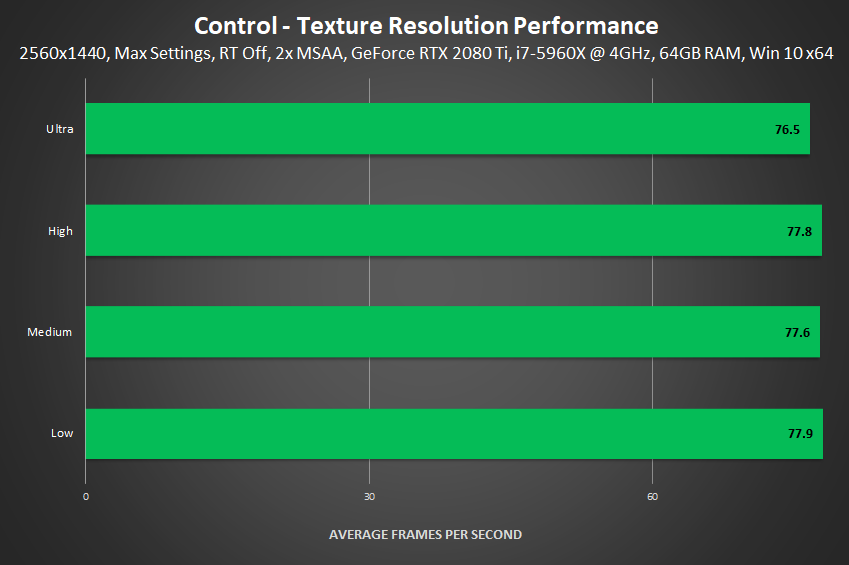

Nvidia's Performance Guide for Control

https://www.nvidia.com/en-us/geforc...performance-guide/#control-texture-resolution

The performance of Low, Medium and High is essentially the same. On Ultra, we suspect there's a few extra mipmaps in use for the better distant texture detail, explaining the slightly lower framerate.

Here is their summary

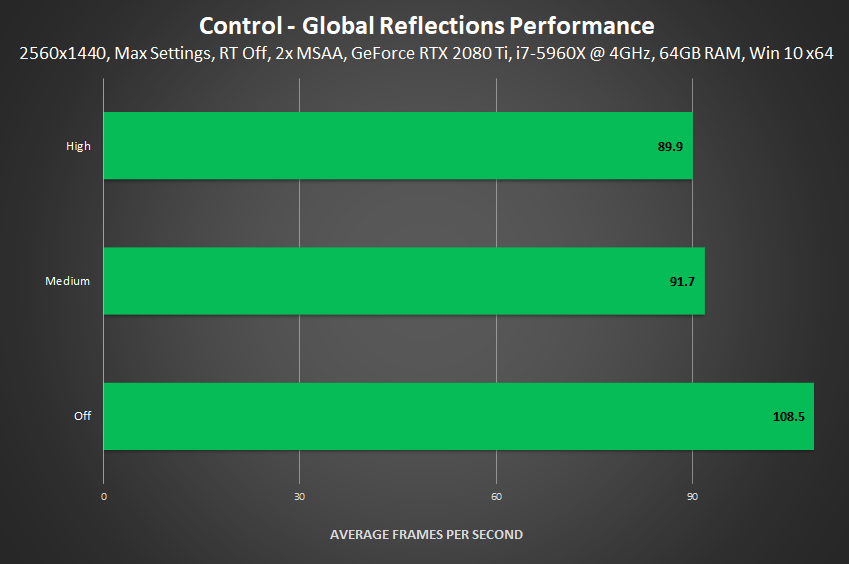

- Global Reflections: Medium instead of High: ~+2 FPS

- MSAA: 2x instead of 4x: +2-4 FPS (or try injecting fast post-process anti-aliasing)

- Screen Space Reflections: Medium instead of High: +9 FPS (if Ray-Traced Reflections is Off)

- Texture Resolution: High instead of Ultra: ~+2 FPS

- Volumetric Lighting: Medium instead of High: +9-11 FPS

Texture resolution has among the lowest performance gains from being turned down in this game (about +2 FPS, level with Global Reflections).

Global reflections for comparison

Shadow of the Tomb Raider (I can't be bothered quoting; you can go take a look)

https://www.nvidia.com/en-us/geforce/news/shadow-of-the-tomb-raider-graphics-and-performance-guide/

When textures are thrown into the mix you are not guaranteed to run out of horsepower before you run out of VRAM.

Associate

- Joined

- 4 Nov 2015

- Posts

- 250

Textures and performance is an interesting read if you're into graphics programming. Essentially the more filtering you have for a texture (linear, bilinear, trilinear) the more reads from texture memory the GPU will perform before eventually producing a colour for a given pixel. Mipmaps (size-reduced copies of textures in GPU memory) can speed this up as smaller textures can be located in lower-level cache. It's all mostly the same performance now, fortunately, as GPUs are enormously parallel as we know. From what I remember reading, the memory usage increase for mipmaps for a given texture is about a third, and you really want as many textures with mipmaps in memory as possible.
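The "about a third" figure follows from each mip level having a quarter of the texels of the level above, so the extra memory is 1/4 + 1/16 + 1/64 + ... which sums to 1/3 of the base texture. A quick Python check, assuming an uncompressed texture at 4 bytes per texel (real games mostly use compressed formats, which changes the absolute sizes but not the ratio):

```python
def mip_chain_bytes(width, height, bytes_per_texel=4):
    """Return (base_bytes, total_bytes) for a full mip chain.

    Each level halves both dimensions (minimum 1) until 1x1 is reached.
    bytes_per_texel=4 assumes an uncompressed RGBA8 texture.
    """
    base = width * height * bytes_per_texel
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if w == 1 and h == 1:
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return base, total


base, total = mip_chain_bytes(4096, 4096)
overhead = (total - base) / base
print(f"base {base / 2**20:.1f} MiB, with mips {total / 2**20:.1f} MiB, "
      f"overhead {overhead:.1%}")
```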

I'm very curious about how AMD's "Infinity Cache" affects this beyond the size of the cache itself.

Associate

- Joined

- 1 Oct 2009

- Posts

- 1,213

- Location

- Norwich, UK

Textures are the biggest users of VRAM and, from the following information, have one of the lowest performance penalties.

When textures are thrown into the mix you are not guaranteed to run out of horsepower before you run out of VRAM.

That depends on a few things. Many of the other settings that eat up vRAM use a different amount depending on things like screen resolution. If you have a shader effect that writes out to a buffer in memory, then as you increase screen resolution that buffer size increases, whereas textures do not grow with resolution; they're a sort of one-off fixed cost. It's broadly true that different visual features have different impacts on vRAM and on frame rate, which is why there's always room for certain games to be edge cases to what is being said here.
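A rough Python illustration of that difference. The 8 bytes per pixel buffer format and the 2048x2048 uncompressed texture are assumptions picked for the example, not figures from any particular game:

```python
def render_target_bytes(width, height, bytes_per_pixel):
    """Size of one full-screen buffer; grows with screen resolution."""
    return width * height * bytes_per_pixel


RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4k": (3840, 2160)}

# Hypothetical uncompressed 2048x2048 RGBA8 texture: same size at any resolution.
TEXTURE_BYTES = 2048 * 2048 * 4

for name, (w, h) in RESOLUTIONS.items():
    # Assumed 8 bytes per pixel, e.g. four 16-bit channels.
    buf = render_target_bytes(w, h, 8)
    print(f"{name}: buffer {buf / 2**20:.1f} MiB, "
          f"texture {TEXTURE_BYTES / 2**20:.1f} MiB")
```

Going from 1080p to 4K quadruples the pixel count, so every full-screen buffer quadruples too, while the texture line stays the same.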

Regarding Wolfenstein in your analysis, note that I replied to other people in detail about Wolfenstein 2 a few pages back, and both games use the same engine, id Tech 6. These games stream textures dynamically, and setting the texture quality level actually just sets the size of the vRAM pool used to swap textures in and out. A bigger pool means the engine can be lazier about flushing out unnecessary textures and can load in higher quality assets to replace low quality ones in close proximity to you. However, side by side comparisons from people who manually tweaked this value to go higher than the presets (and incorrectly labelled the result "8k textures" and "16k textures") found basically no visible difference, primarily because the base texture resolution is the same; the engine is just lazier about flushing out unnecessary textures at certain distances from view.
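As a toy model of that behaviour, here is a fixed-budget pool with least-recently-used eviction in Python. This is only a sketch of the general idea; `TexturePool`, `simulate` and the eviction policy are hypothetical and not how id Tech 6 is actually implemented.

```python
from collections import OrderedDict


class TexturePool:
    """Toy fixed-budget texture pool with least-recently-used eviction."""

    def __init__(self, budget_bytes):
        self.budget_bytes = budget_bytes
        self.used_bytes = 0
        self.evictions = 0
        self._resident = OrderedDict()  # texture name -> size in bytes

    def request(self, name, size_bytes):
        """Mark a texture as needed, loading it into the pool if absent."""
        if name in self._resident:
            self._resident.move_to_end(name)  # now the most recently used
            return
        if size_bytes > self.budget_bytes:
            raise ValueError("texture larger than the whole pool")
        # Flush the least recently used textures until the new one fits.
        while self.used_bytes + size_bytes > self.budget_bytes:
            _, freed = self._resident.popitem(last=False)
            self.used_bytes -= freed
            self.evictions += 1
        self._resident[name] = size_bytes
        self.used_bytes += size_bytes


def simulate(budget_bytes, accesses, size_bytes=1):
    """Replay a sequence of texture requests and count evictions."""
    pool = TexturePool(budget_bytes)
    for name in accesses:
        pool.request(name, size_bytes)
    return pool.evictions


accesses = list("abcabc")  # the same three textures requested twice
print(simulate(2, accesses), simulate(3, accesses))
```

The textures requested are identical in both runs; the larger pool simply has to flush less often, which fits the observation that raising the pool size past the presets made no visible difference.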

It also revealed that measuring the vRAM budgets in these games without the proper tools is simply inaccurate: until very recently, measurements reported memory allocated rather than memory in use, or better yet memory put to "useful" use (something that makes a visual difference).

So yes the internal behaviour of the vRAM is very complex and nuanced but tends to average out across games, budgets in vRAM for certain things tend to remain similar between games. This is why simply testing the criteria is better, just take lots of games, crank up the settings to 4k ultra and see what fails first, the GPU or the vRAM. And when doing this for a 3080 it's the GPU that goes first.

Textures are the biggest users of VRAM and, from the following information, have one of the lowest performance penalties.

...

When textures are thrown into the mix you are not guaranteed to run out of horsepower before you run out of VRAM.

Just using higher res textures is not dissimilar to putting lipstick on a pig.

What provides the knock out look is much higher detail models, far better fluid dynamics and of course RT. These are the performance munching effects that we will see going forwards, that will make us want to upgrade.

I'm not advocating Texture > Everything. I didn't say turn off every other effect to maintain 4K textures.

Also, your analogy doesn't work in this instance. It's not even close to relevant. Good textures are important to raising the final quality of an image.

But for a "knock out" image, you need a combination of good textures, "highly" detailed models (let's be honest, most of that detail for games is in the normal maps and displacement maps) and good lighting (you could also throw in particle effects). Any area that is lacking will bring down the whole final image. The closest thing to a "knock out" effect would be lighting.

I think we already have good enough textures. What we need now is far better rendering quality with more dynamics in the scene. We need better performing GPUs before more VRAM.

Real limitation at the moment is the CPU, puts a lot of restrictions on what you can do with physics/ai.

There have been suggestions of Nvidia putting a CPU on their GPUs in future, something that is bound to happen. I'd like to see physics move back to the GPU again, but with an open standard. With AI we already have a start with Tensor cores, but again it needs to be more open to succeed.

Usually Nvidia does a closed implementation first and then Microsoft adds an equivalent in the DirectX API and then AMD supports it years later, like with RTX/DLSS etc.

If they add a CPU I suspect they will market it under the PhysX brand.

Associate

- Joined

- 4 Nov 2015

- Posts

- 250

I wouldn't want to be one of those 20GB holdouts if the news is true. Back of the queue means a long wait. It also suggests Nvidia knows AMD won't be competitive vs the 3080/3090.

I imagine most 20GB holdouts are also AMD holdouts now, which would make sense given the lack of Nvidia stock.

I presume Nvidia knows the card is inferior to the 3080 in raw performance. I suppose that would be OK if you just needed 16/20GB for professional work.

The move to Samsung was obviously about TSMC and their prices. They refused to drop the 7nm prices for Nvidia, basically counting on Nvidia not having an alternate source to go to, so Nvidia called that bluff and used the cheaper Samsung fab, which led to TSMC dropping their prices straight away.

On power draw the leaked big Navi numbers look to be revised just recently, and their 3080 equiv will be pulling the same amount of power. So it's not clear to me that TSMC 7nm is significantly better per watt. Time will tell I suppose.

My understanding is that the current 7nm is not a true 7nm and is more an iteration on the 8nm process. AMD's roadmap is to drop 5nm in 2021, which is why Nvidia is actually on the back foot and knee-jerked the 3080 launch. The 10GB launch failed, and they have now cancelled the 20GB, realising it won't improve their market position for the extra cost, and have moved to bring the counter punch to market with 7nm Ti/Super cards in early 2021. What worries Nvidia most, I think, is the 5nm launch AMD have planned for 2021, as right now Nvidia are behind.

This really does seem like an Intel/AMD situation, where Nvidia sat back for half a second and got caught napping.
