No doubt even if it does run fine (and on everyone's system), they'll ignore all that and go "zOMG but the requirements state otherwise!!!!"

I was just thinking there, I wonder if AMD could maybe make a "VRAM optimiser" (like their ray tracing analyser), given how their GPUs guzzle VRAM compared to Nvidia GPUs.

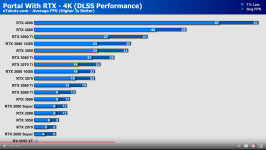

Nor the fact that apparently a 6800 XT will provide an equivalent experience to a 4080? Or how about the 3070 only being good enough for 1440p 30 FPS....

LOL at the Portal one too, needing 16 GB VRAM to be playable....

Even though every GPU needs to use DLSS Performance or Ultra Performance (which reduces VRAM usage) and/or FG, along with adjusted settings, in order to get 60+ FPS, in which case GPUs with <16 GB VRAM get a perfectly playable experience. Well, except for AMD GPUs.

Don't worry about what makes sense. The narrative must be maintained at all costs.