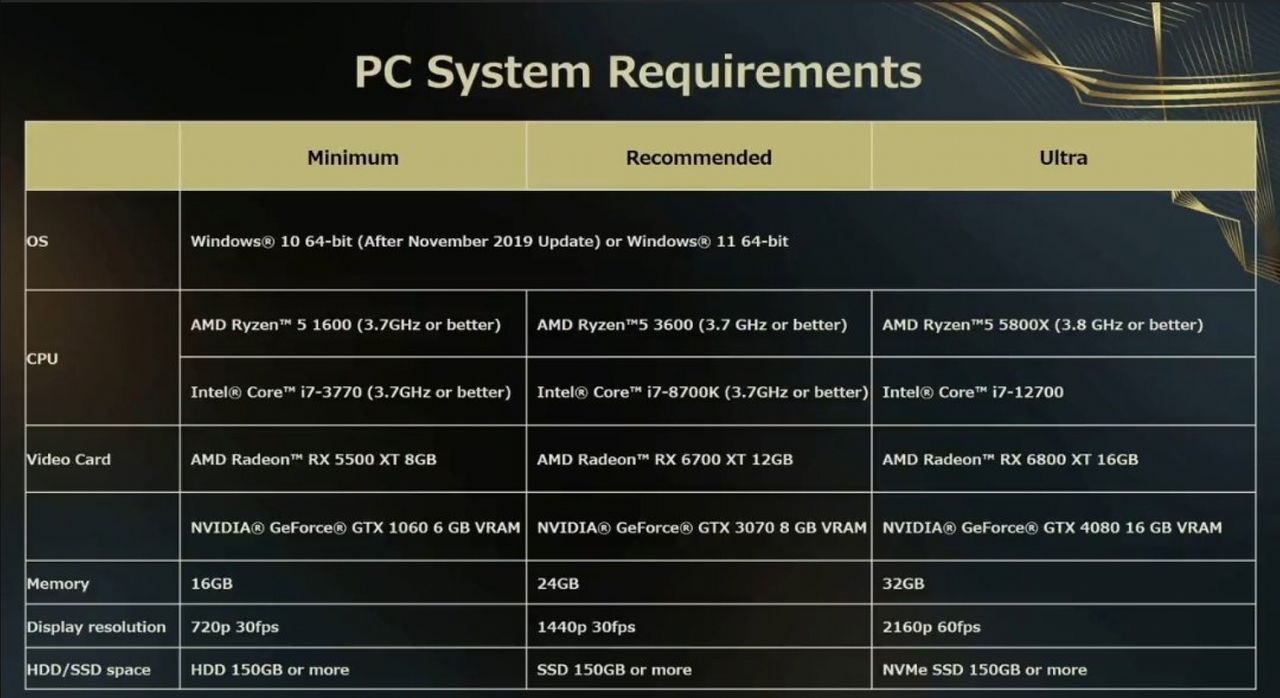

So the game doesn't state anywhere that it requires 16 GB of VRAM, does it?

This is the mentality of some people in these VRAM threads, and these people have the cheek to accuse others of being disingenuous. Drop settings one notch? Not allowed to do that in these parts. A GPU either runs a game at maximum settings or doesn't run it at all!

According to a few in here, anyway.
