but is that even theoretically possible?

here we are comparing dropping textures one notch with not being able to properly experience ray tracing?

isn't the choice clear.. like a simple choice between life and death

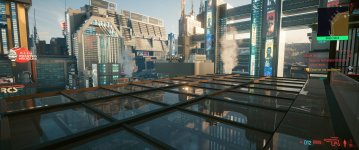

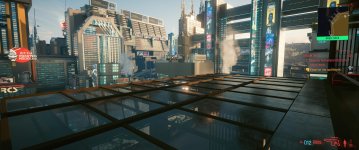

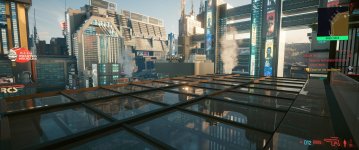

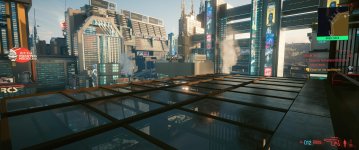

It is. Some games have a variety of RT presets, e.g. Cyberpunk 2077 and Metro Exodus Enhanced Edition, and I'm sure there are a good few more, but in most of them RT is just on or off.

I'll personally always sacrifice other settings and/or use a higher preset of DLSS (not FSR though, as it is **** at lower presets) in order to keep RT maxed out, as it makes a far bigger difference to "overall" IQ.

And 3080's...

3080s are still kicking ass when it comes to RT; the same definitely cannot be said for RDNA 2, with the exception of maybe a 6950 XT

) is either bad optimisation or intentional sabotage, take your pick
