Soldato

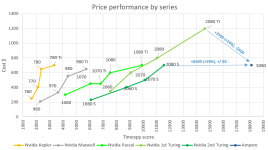

I mean... at the very least it looks like AMD are going to bring out a card faster than the 2080 Ti, probably with an MSRP closer to half the 2080 Ti's, so 2080 Ti owners are gonna get shafted either way. It has been that way more often than not at GPU generation changes, so I don't have much sympathy.

2080 Ti owners were screwed the day they bought their cards. They got the same generational improvement as usual but paid almost double to get it.