
DirectX 12 Showcase New Features and Benefits of D3D12 API

- Thread starter AthlonXP1800


The real question is, will we get games making full use of this? Or will we have to wait for the consoles to play catch-up before we get anything?

Ashes of the Singularity seems to be one of the first. It's from the guys that made Star Swarm.

Soldato

- Joined

- 14 Dec 2013

- Posts

- 2,590

That is not game performance though, so it doesn't tell us that much.

True, I will have to wait and see how it translates to actual games as and when they are released.

Soldato

- Joined

- 25 Nov 2011

- Posts

- 20,680

- Location

- The KOP

Soldato

- Joined

- 14 Aug 2009

- Posts

- 3,487

Consoles are 8-core machines now, so hopefully more cores are going to be used in games.

Both DX12 and Mantle give a good boost to low-end systems too, which will prove popular.

And about 6 are available to developers, which is pretty much what we see in DX12 now; it will probably go beyond that with 12.x when the need arises.

Soldato

- Joined

- 18 May 2010

- Posts

- 23,914

- Location

- London

Soldato

- Joined

- 2 Jan 2012

- Posts

- 12,453

- Location

- UK.

GTX 750 Ti:

DX11 multi-thread: 1,081,530 draw calls per second

DX12: 7,653,465 draw calls per second

Draw Call/Second Increase: 600%

GTX Titan X:

DX11 multi-thread: 2,524,794 draw calls per second

DX12: 14,545,096 draw calls per second

Draw Call/Second Increase: 476%

Intel Iris Pro (4770R):

DX11 multi-thread: 645,940 draw calls per second

DX12: 2,126,150 draw calls per second

Draw Call/Second Increase: 229%

http://www.forbes.com/sites/jasonev...ments/?utm_campaign=yahootix&partner=yahootix
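For anyone who wants to check the percentages, here is a minimal Python sketch that recomputes them from the figures quoted above. The function names are made up for illustration, and the Iris Pro DX12 figure is read as 2,126,150, which is the reading consistent with the quoted 229%.

```python
# Draw calls per second as quoted above: (DX11 multi-thread, DX12).
# The Iris Pro DX12 value is read as 2,126,150 (assumption, see lead-in).
RESULTS = {
    "GTX 750 Ti": (1_081_530, 7_653_465),
    "GTX Titan X": (2_524_794, 14_545_096),
    "Intel Iris Pro (4770R)": (645_940, 2_126_150),
}


def speedup(before, after):
    """How many times more draw calls per second `after` is than `before`."""
    return after / before


def percent_increase(before, after):
    """Percentage increase going from `before` to `after`."""
    return (after - before) / before * 100.0


if __name__ == "__main__":
    for gpu, (dx11, dx12) in RESULTS.items():
        print(
            f"{gpu}: {speedup(dx11, dx12):.2f}x, "
            f"+{percent_increase(dx11, dx12):.0f}%"
        )
```

The Titan X and Iris Pro numbers come out at the quoted 476% and 229%. The 750 Ti works out nearer 608% than 600%, so that figure looks like it was rounded in the article.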


Why? Draw calls only matter if the game actually requires that many. Current games (outside of Star Swarm, which isn't a game) don't even come close to that. There is no point in more cores if there is no demand for them. Also, will AMD's 8-core CPUs outperform Intel's 4-core ones? If not, I don't see any reason for Intel to actually change anything. At least not anytime soon.
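To put the per-second benchmark figures into per-frame terms, a rough sketch in Python follows. The 60 FPS target and the function name are assumptions for illustration only; the two inputs are the GTX 750 Ti figures quoted earlier in the thread.

```python
def calls_per_frame(calls_per_second, fps=60):
    """Whole draw calls that fit in one frame at the given frame rate.

    `fps=60` is an assumed target, not something from the benchmark.
    """
    return calls_per_second // fps


if __name__ == "__main__":
    dx11 = calls_per_frame(1_081_530)   # GTX 750 Ti, DX11 multi-thread
    dx12 = calls_per_frame(7_653_465)   # GTX 750 Ti, DX12
    print(f"DX11: about {dx11} draw calls per frame at 60 FPS")
    print(f"DX12: about {dx12} draw calls per frame at 60 FPS")
```

Whether a given game needs anywhere near that many calls per frame is exactly the question being asked here; the sketch only converts the units.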

You would be surprised at how many hoops developers have to jump through to minimise the number of draw calls they need. With a low-abstraction API like DX12, Vulkan, Mantle etc., they can easily and directly code what they want, instead of having to bundle so many objects into a single command just so they can skirt under the limitations of DirectX.

EDIT: The above point is also not a matter of optimisation; it's a matter of having to work within a severe and artificial limitation imposed by the API being used. Making fewer draw calls also means your game starts to lose its ability to render a large number of unique objects in a scene, due to some of the workarounds developers use.
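As a toy illustration of the bundling described above, here is a Python sketch that groups scene objects sharing a mesh and material into one draw call. This is only a model of the idea, not real DirectX code; `SceneObject` and both functions are hypothetical names.

```python
from collections import defaultdict
from typing import NamedTuple


class SceneObject(NamedTuple):
    name: str
    mesh: str
    material: str


def draw_calls_unbatched(objects):
    """One draw call per object, so every object is free to be unique."""
    return [[obj] for obj in objects]


def draw_calls_batched(objects):
    """One draw call per (mesh, material) pair.

    Objects only merge if they share both, which is why heavy batching
    pushes a scene towards many copies of the same few assets.
    """
    groups = defaultdict(list)
    for obj in objects:
        groups[(obj.mesh, obj.material)].append(obj)
    return list(groups.values())


scene = [
    SceneObject("tree_01", "tree", "bark"),
    SceneObject("tree_02", "tree", "bark"),
    SceneObject("tree_03", "tree", "bark"),
    SceneObject("car_01", "car", "red_paint"),
    SceneObject("car_02", "car", "blue_paint"),
]

if __name__ == "__main__":
    print("unbatched draw calls:", len(draw_calls_unbatched(scene)))
    print("batched draw calls:  ", len(draw_calls_batched(scene)))
```

The three identical trees collapse into one call, but the two cars cannot merge because their materials differ. The more unique the objects are, the less batching helps.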

Even the CPUs in the PS3 and Xbox 360 were capable of pushing far more draw calls than a high-end i7 with DX at the time.

This has already been shown with BF4. Although it is optimised for DX11, playing a multiplayer game bumps up the CPU load and the number of draw calls significantly. Single player is typically a far more controlled environment, so performance is kept optimal by doing a lot of pre-baking and limiting the number of unique objects in any one scene or area. With multiplayer, this is thrown out the window: in areas that were already complex, performance degrades due to the draw call limitations as more unique objects and players appear. Many games released in the past (GTA III, Crysis, etc.) could and should have had far superior performance to what they had, if only we had had a low-abstraction API at the time.

Even CDPR are considering adding DX12 to Witcher III in the future. Take games like GTA V: although the PC and XOne/PS4 versions have far higher traffic density etc., with DX12 on a PC it could have dense traffic, tons of foliage and tons of NPCs walking the streets and still breeze along at a nice, smooth FPS, all while having far more light sources in the game, making the scene even better.

Pure DX12 and Vulkan games will start to become far more interactive and diverse, and have many more unique objects on screen at once.


Soldato

- Joined

- 19 Feb 2011

- Posts

- 5,849

I want a DX12 MMO. Jeebus, can you imagine a DX12 version of DAOC on a modern engine? The PvP sieges would be insanely epic.

So since DX12 is scaling very well to at least 6 cores, has anyone tested an AMD FX-8350 vs mainstream Intel CPUs (e.g. i7 4770k)?

It'd be interesting to see if DX11 was holding the FX CPUs back somewhat. I always thought AMD jumped the gun with low single-thread, high multi-thread performance, instead of Intel's more balanced approach.
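The reason core count matters here is that DX12 lets several threads record command lists at once and then submit them in order. Below is a structural sketch of that pattern in Python. It is a model only: `record_chunk`, `split` and `record_frame` are hypothetical names, the 6 workers simply mirror the "6 cores" mentioned above, and Python threads will not show the real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def record_chunk(draws):
    """Stand-in for one worker thread recording one command list."""
    return [f"draw({d})" for d in draws]


def split(items, parts):
    """Split `items` into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def record_frame(draws, workers=6):
    """Record chunks in parallel, then concatenate them in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so submission order
        # matches the original draw order.
        command_lists = list(pool.map(record_chunk, split(draws, workers)))
    return [cmd for command_list in command_lists for cmd in command_list]


if __name__ == "__main__":
    frame = record_frame(list(range(20)))
    print(len(frame), "commands recorded;", frame[0], "...", frame[-1])
```

With a CPU that is weak per thread but has many threads, more of the recording work can be spread out like this, which is why the FX-8350 comparison is an interesting one.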


Finishing installing Windows 10 and updating to the latest build, whacking drivers on etc., then I will fire up 3DMark... Just out of curiosity: I know we ideally shouldn't be comparing systems using this, but I'd like to see a thread up for those that want to post, as I plan to when I've run the tests.

Soldato

- Joined

- 14 Aug 2009

- Posts

- 3,487

So since DX12 is scaling very well to at least 6 cores, has anyone tested an AMD FX-8350 vs mainstream Intel CPUs (e.g. i7 4770k)?

It'd be interesting to see if DX11 was holding the FX CPUs back somewhat. I always thought AMD jumped the gun with low single-thread, high multi-thread performance, instead of Intel's more balanced approach.

Different sources, but I suppose the numbers should be close to that.

From the AMD blog, the system should be: AMD FX-8350, AMD Radeon™ R9 290X

http://community.amd.com/community/...mance-in-new-3dmark-api-overhead-feature-test

http://www.pcworld.com/article/2900814/tested-directx-12s-potential-performance-leap-is-insane.html


Interesting, so at least in draw calls the FX chips match (or possibly marginally exceed) the mainstream Intel cores.

Not like I'll downgrade since I'm running a 3770k at 4.2 GHz at the moment, but interesting for competition's sake.


Soldato

- Joined

- 19 Feb 2011

- Posts

- 5,849

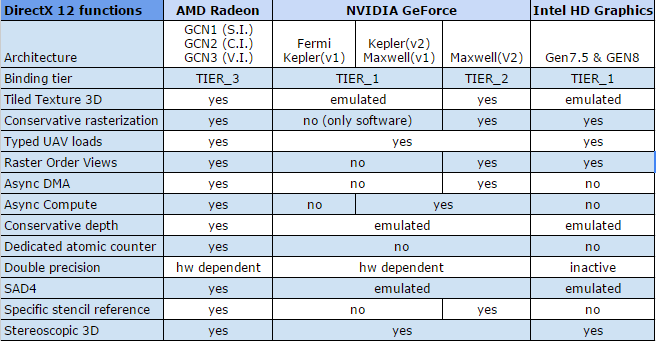

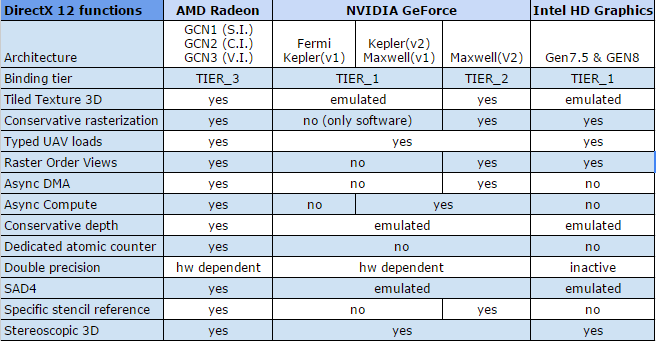

Thanks Matt, any word from AMD confirming DX12 Tier support, in terms of which DX12 features will and won't be supported on current GCN GPUs?

So since DX12 is scaling very well to at least 6 cores, has anyone tested an AMD FX-8350 vs mainstream Intel CPUs (e.g. i7 4770k)?

It'd be interesting to see if DX11 was holding the FX CPUs back somewhat. I always thought AMD jumped the gun with low single-thread, high multi-thread performance, instead of Intel's more balanced approach.

http://forums.overclockers.co.uk/showthread.php?t=18663220

I found this... http://forum.jogos.uol.com.br/lista...o-dx12-nvidia-deu-zinca-demais-aqui_t_3402386

Nvidia said they have been a DX12 Development partner with Microsoft for 4 years.

Why is it then that, according to this, Kepler only has Tier 1 DX12 support and Maxwell only Tier 2?
