Watched about 5 minutes. That guy sounds sensible to me. A rare thing online these days with so many people taking sides.

DLSS Momentum Continues: 50 Released and Upcoming DLSS 3 Games, Over 250 DLSS Games and Creative Apps Available Now

- Thread starter Nexus18

Yep, he's repeating what DF said, but it's a good point.

This is what frametimes look like with FSR3 in Avatar

The whole point of VRR/FreeSync/G-Sync is that the line on the right side should be pretty flat and smooth, but with FSR3 FG it's not, and you feel it. The frametime spikes get worse the harder the GPU is working and are less noticeable when the GPU is not working hard.
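To put a number on "flat and smooth", here is a rough sketch of how a frametime capture could be summarised. The sample values, the function name `frametime_summary` and the 1.5x spike threshold are all made up for illustration; they are not measurements from Avatar or from any capture tool.

```python
from statistics import mean, median, pstdev

def frametime_summary(frametimes_ms, spike_factor=1.5):
    """Summarise per-frame times given in milliseconds.

    spike_factor is an arbitrary illustrative threshold: a frame counts as
    a spike when it takes longer than spike_factor times the median frame.
    """
    med = median(frametimes_ms)
    spikes = [t for t in frametimes_ms if t > spike_factor * med]
    return {
        "avg_fps": 1000.0 / mean(frametimes_ms),
        "median_ms": med,
        "stdev_ms": pstdev(frametimes_ms),
        "spike_count": len(spikes),
        "worst_ms": max(frametimes_ms),
    }

# Two made-up captures with the same average frametime (10 ms per frame).
smooth = [10.0] * 8
spiky = [8.0, 8.0, 8.0, 16.0, 8.0, 8.0, 8.0, 16.0]

smooth_stats = frametime_summary(smooth)
spiky_stats = frametime_summary(spiky)
print(smooth_stats)
print(spiky_stats)
```

Both captures report the same average FPS, which is why an FPS counter alone can hide this. The spread and the spike count are what separate the flat line from the jagged one.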

More catching on to how great DLDSR is

Worth a click or two to enable wouldn't you say?

Yeah it's crazy how much better it looks than native/dlss quality. Such an underrated feature by nvidia.

Now you are seeing what I have been saying all along about 1440p being potato and 4K being so much better. DLDSR is what made this monitor's archaic resolution bearable for me. I use it whenever I can in games

I wouldn't say 1440p is potato. It still looks great, actually better than my 4K 55" I think, and with DLDSR it's miles better than the 4K 55" OLED.

Also, 4K was absolutely naff back when it first came about, as no games had high-resolution assets/textures; all it was good for was anti-aliasing. It's only in the last 3 years that it's become more noticeable, especially because of TAA adoption, where high res works/looks better with TAA, which is why DLDSR and DLSS Performance work so well.
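For anyone wondering what DLDSR plus DLSS Performance means in actual pixel counts, here is a rough bit of arithmetic. It assumes a 2560x1440 monitor, that the DLDSR factor (2.25x here) multiplies the total pixel count, and that DLSS Performance renders at half the output resolution on each axis. The function names are just for illustration.

```python
import math

def scale_by_area(width, height, area_factor):
    """Scale a resolution by a total-pixel-count factor (DLDSR-style)."""
    axis = math.sqrt(area_factor)  # 2.25x the pixels is 1.5x per axis
    return round(width * axis), round(height * axis)

def scale_by_axis(width, height, axis_scale):
    """Scale a resolution by a per-axis factor (DLSS-mode-style)."""
    return round(width * axis_scale), round(height * axis_scale)

native = (2560, 1440)                            # assumed monitor resolution
dldsr_target = scale_by_area(*native, 2.25)      # resolution the game outputs
internal = scale_by_axis(*dldsr_target, 0.5)     # what DLSS Performance renders

print("monitor:       ", native)
print("DLDSR target:  ", dldsr_target)
print("DLSS internal: ", internal)
```

Under those assumptions the game renders internally at 1080p, DLSS reconstructs that up to a 4K image, and DLDSR scales the 4K image back down to the 1440p panel.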

Potatoe. Nuff said

To me anyway. That was my resolution like 10-15 years ago. I think I sold my 30" Dell 2560x1600 monitor on members market in like 2009 man..

I saw a huge difference in 4K from day 1 in 2014, assets or no. Now it is day and night, but some still can't see it

Last I tested in Cyberpunk 2077, the FPS was similar but latency was higher when downsampling, so it's not a clear-cut win. At least it wasn't in that case.

Can't say I have noticed it tbh, will check again at some point.

For those who don't want to use DLSSTweaks, you can switch to DLSS 2.5.1 to get rid of the ghosting in Avatar.

It will probably be different per scene/game/card. I was looking at the latency as per the NVIDIA OSD.

A 4090 will be less affected by this, of course. Higher latency doesn't mean it's unplayable, just that it's there.

It took a while to make this video. Many frames had to be separated manually, and my editing program crashed multiple times splitting the original raw video: just over 11 minutes of footage turned into about 40,000 individual frames. The intent of this video was to see how a playback of only generated frames would look and, now that we have FSR 3, how it stacks up against DLSS Frame Gen.

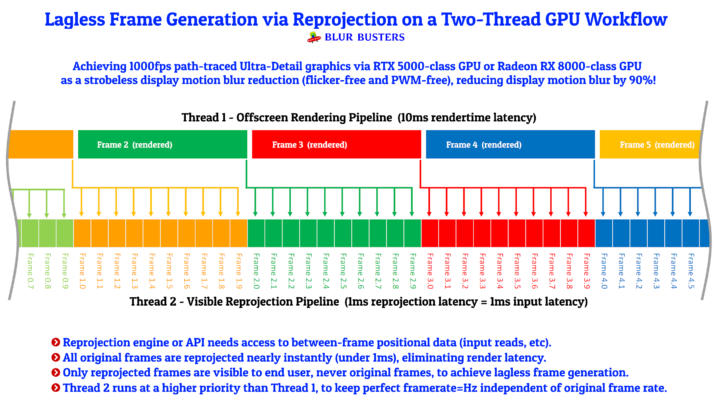

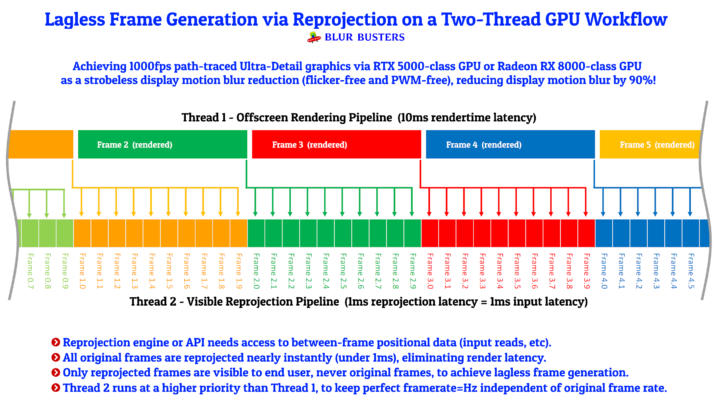

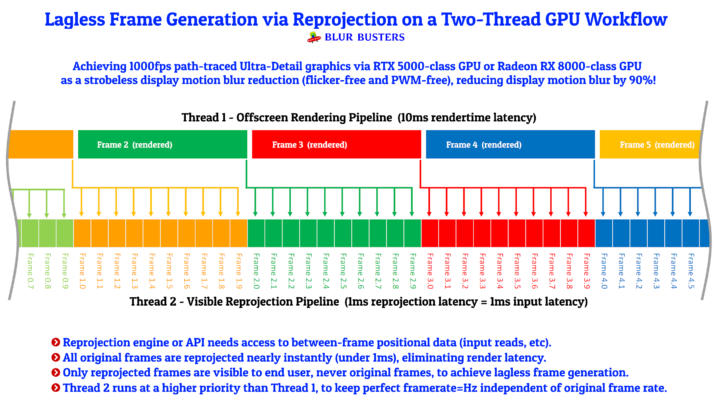

Good read this:

blurbusters.com

Frame Generation Essentials: Interpolation, Extrapolation, and Reprojection - Blur Busters

Foreword by Mark Rejhon of Blur Busters: This article is co-written with a new guest writer, William Sokol Erhard, with some editing and section addendums by Mark Rejhon. There will be additional pieces coming out. Let's welcome William aboard! Introduction Advanced image temporal upscaling and...

Yesterday, “fake frames” was meant to refer to classical black-box TV interpolation. It is funny how the mainstream calls them “fake frames”;

But, truth to be told, GPU’s are currently metaphorically “faking” photorealistic scenes via drawing polygons/triangles, textures, and shaders. Reprojection-based workflows is just another method of “faking” frames, much like an MPEG/H.26X video standard of “faking it” via I-Frames, B-Frames and P-Frames.

That’s why, during a bit of data loss, video goes “kablooey” and turns into garbage with artifacts — if a mere 1 bit gets corrupt in a predicted/interpolated frame in a MPEGx/H26x video stream. Until the next full non-predicted/interpolated frame comes in (1-2 seconds later).

Over the long-term, 3D rendering is transitioning to a multitiered workflow too (just like digital video did over 30 years ago out of sheer necessity of bandwidth budgets). Now our sheer necessity is a Moore’s Law slowdown bottleneck. So, as a shortcut around Moore’s Law — we are unable to get much extra performance via traditional “faking-it-via-polygons” methods.

The litmus test is going lagless and artifactless, much like the various interpolated frame subtypes built into your streaming habits, Netflix, Disney, Blu-Ray, E-Cinema, and other current video compression standards that use prediction systems in their compression systems.

Just as compressors have original knowledge of the original material, modern GPU reprojection can gain knowledge via z-buffers and between-frame inputreads. And “fake it” perceptually flawlessly, unlike year 1993’s artifacty MPEG1. Even the reprojection-based double-image artifacts disappear too!

TL;DR: Faking frames isn’t bad anymore if you remove the “black box” factor, and make it perceptually lagless and lossless relative to other methods of “faking frames” like drawing triangles and textures
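The article title names interpolation and extrapolation. As a toy illustration of the difference, here is a sketch that tracks a single object's x-position across frames. Real frame generation works on whole images, so this is only the basic idea, and the function names and numbers are made up.

```python
def interpolate(prev_pos, next_pos, t=0.5):
    """In-between position; needs the next real frame to exist already."""
    return prev_pos + (next_pos - prev_pos) * t

def extrapolate(older_pos, prev_pos, t=0.5):
    """Predicted position past the latest real frame, from past frames only."""
    velocity = prev_pos - older_pos  # movement per frame so far
    return prev_pos + velocity * t

# Hypothetical x-positions in three consecutive real frames.
# The object speeds up between the second and third frame.
real = [0.0, 10.0, 30.0]

interp_mid = interpolate(real[1], real[2])  # uses the third frame
extrap_mid = extrapolate(real[0], real[1])  # guessed before the third frame exists

print("interpolated:", interp_mid)
print("extrapolated:", extrap_mid)
```

The interpolated value lands exactly between the two real frames but can only be shown once the later frame has been rendered, which is where the extra latency comes from. The extrapolated value needs no waiting but misses when the motion changes, as it does here.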

Amazing how one method of doubling your performance or even more (multi-GPU) is still left out.

Apparently Star Citizen is getting DLSS in a few months, which should help nicely.

TPU voted DLSS 3/FG as the best gaming technology of the year:

www.techpowerup.com

TechPowerUp Best of 2023 - The Best in Hardware & Gaming this Year, Ranked

As we look back at 2023, we unveil our picks for the best in PC hardware and gaming technology. Join us as we explore our favorite choices across various components that define the contemporary gaming PC, accompanied by the cutting-edge software that powers these systems.

NVIDIA DLSS 3 Frame Generation was announced late last year along with the GeForce RTX 40-series "Ada" graphics cards, to some skepticism, since we'd seen interpolation techniques before, and knew it to be a lazy way to increase frame-rates, especially in a dynamic use-case like gaming, with drastic changes to the scene, which can cause ghosting. NVIDIA debuted its RTX 40-series last year with only its most premium RTX 4090 and RTX 4080 SKUs, which didn't really need Frame Generation given their product category. A lot has changed over the course of 2023, and we've seen DLSS 3 become increasingly relevant, especially in some of the lower-priced GPU SKUs, such as the RTX 4060 Ti, or even the RTX 4070. NVIDIA spent the year trying to polish the technology along with game developers, to ensure the most obvious holdouts to this technology—ghosting, is reduced, as is its whole-system latency impact, despite Reflex being engaged by default. We've had a chance to enjoy DLSS 3 Frame Generation with a plethora of games over 2023, including Cyberpunk 2077, Alan Wake 2, Hogwarts Legacy, Marvel's Spider-Man Remastered, Naraka: Bladepoint and Hitman 3.

Wasn't sure about the Nvidia FG at first (tried it on CP w/PT, fps too low and felt sluggish) but since trying it on both Starfield and The Finals (combined with great DLSS on both) I'm beginning to be a really big fan!

You're just justifying your 40xx purchase!!!!!

Truth.

Hell I have a 4090 and 4080 sitting here and am keeping the 80, just don't do enough gaming to justify it!!!