Wow where were you when this was the topic? @Purgatory will be pleased though.

Truth hurts indeed eh @LtMatt

What I can't fathom is the idea that any rational person would use one single game to give inaccurate data on relative RT performance.

Agreed, hence my rational comment. He normally gives a good, balanced opinion based on wide and varied sources.

The point you made was right: at least make some effort and don't base it off one game, especially one that is sponsored by said company. It's the same guys that usually demand a plethora of sources and say the same if you use a game like Far Cry lol.

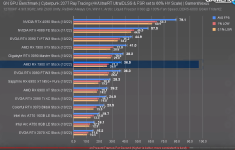

AMD Radeon RX 7900 XTX & RX 7900 XT review: clock speeds, benchmarks (WQHD, UHD & 5K) with and without RT / Average clock speeds under load (www.computerbase.de)

Got some other AMD sponsored and neutral titles there.

And filtering so only AMD sponsored and neutral titles:

I don't think Spider-Man's RT is Nvidia influenced/sponsored either though (it had RT on consoles long before it came to PC and has only had the resolution etc. dialled up; only the other RTX features are), but I will leave it off anyway.

Truth hurts sometimes I guess.

Built-in obsolescence from Nvidia. Been doing it for years with VRAM.

It's an Nvidia sponsored title too. Good find @Poneros, is that recent testing?

Been doing it for the last 3-4 gens at least. Those VRAM chickens have certainly come home to roost now...

Obviously you don't remember the 980 Ti vs Fury X fiasco, where back then "4GB was enough for 4K" according to an AMD rep.

www.kitguru.net

You know what? There's just something about Christmas that gets me all misty-eyed and full of reminiscences, and who could ever forget this beauty? Somehow one comment by an AMD rep doesn't really stack up, does it?

I bring you the Ghost of Christmas Past, Ebenezer Nvidia.

Nvidia settles class-action lawsuit over GTX 970 VRAM - KitGuru

The GTX 970 has been an incredibly successful graphics card for Nvidia, it even went on to become th... www.kitguru.net

You do remember that was false advertising from Nvidia and completely justified the lawsuit? Not sure what relevance that has to me specifically citing the 980 Ti vs Fury X VRAM situation and a certain person's stance back then on VRAM (Nvidia's and AMD's top-end equivalent competing GPUs).

Also, for what it's worth, the 970 ended up being a stellar GPU and people loved it despite the 3.5GB + 512MB fiasco, as it was very well priced for the performance it offered.

Someone should take AMD to court over their misleading claims of performance and efficiency gains.

I used a Fury (non-X) at 4k. The 4GB of VRAM was juuust enough at the time. A bigger problem was that GPUs back then weren't really fast enough generally, so graphical settings had to be turned down long before VRAM ran out.

The quote mentioned was 4GB being enough to max out GTA V at playable FPS, without any MSAA applied. Which it was, and still is. Somehow 8 years later it became all games.

There are always outliers, but AMD have nearly always given more VRAM, and it has helped with longevity. 7970 vs GTX 680, for example.

I wonder if @Poneros dropped this bomb and then thought right, time to relax and admire my work.

It has to be recent testing as the 7900 XTX is in there and as the game has received loads of updates and higher quality RT etc. Guess VRAM requirements have soared from launch.

Not sure what you're saying: it was false advertising, and that's somehow a good thing? Not sure how the comments of one AMD rep are somehow equal to NV's false advertising leading them to settle a class-action lawsuit, but nothing from you surprises me... or anyone else for that matter...

That old 3080 FE really is choking badly at 4K in CP2077, isn't it? Damn... Good old Nvidia and their planned obsolescence...

I use CP2077 because it's the most representative of demanding RT (and CPU load) in games, and it's a hefty game as well. It's sponsored by Nvidia but the performance is neutral, usually shifting depending on card gen and settings: it's compute heavy when maxed, which favours old AMD and new NV, but it also favours new AMD when CPU bottlenecked and at lower settings (so less compute heavy).

What I can't fathom is the idea that any rational person would use one single game to give inaccurate data on relative RT performance. Especially as this poster then went on to give a much more balanced and accurate response in a subsequent post.

I won't lie, if it was down to a 7900 XTX and a 4080 at close to the same prices, I would pick a 4080 based on the better RT. But that's just me and I can see why the 7900 XTX would be the preference for others.

I blame AMD for dashing all hope.

To be fair, Poneros is good with his opinions and information. However, he has seemed to creep further into the Nvidia dark side recently, so I hope he can be rescued.

What happened to the 3080 at 4K?

RDNA 2 lacklustre RT, maybe planned obsolescence too?

haha

You are doing the Lord's work here, even if there is some driver and game update work needed to improve AMD perf. That poor 3080!

Easier than info-dumping a dozen games, especially since, like I've said, it doesn't change the ratios by much.

Really, the most damning thing of all would be if I just copy-pasted the TPU RT benches, all showing near 0% improvement for AMD gen-on-gen, unlike NV, which has been slowly chipping away at it. Against NV you can sort of excuse it with "oh, they just had a 2 year lead", which is not a good justification but you could sell it. The truth is AMD didn't even improve on its own product! (except for fatter margins ofc)
