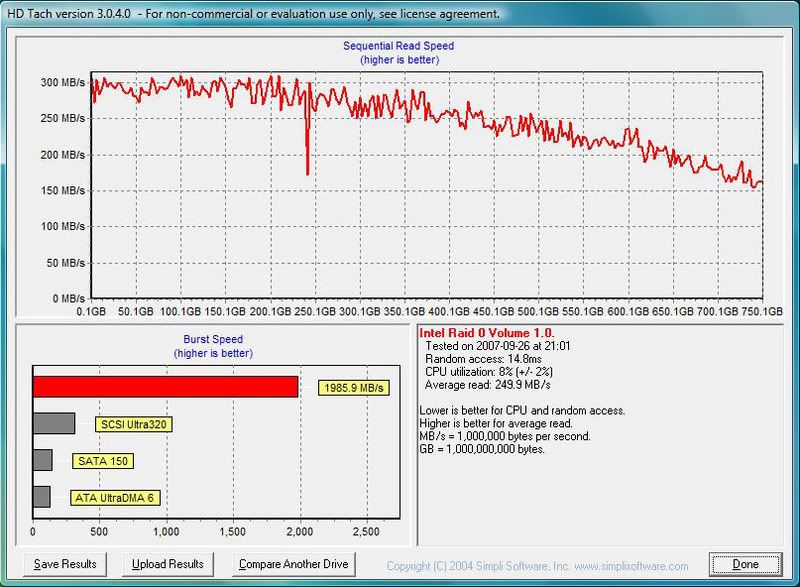

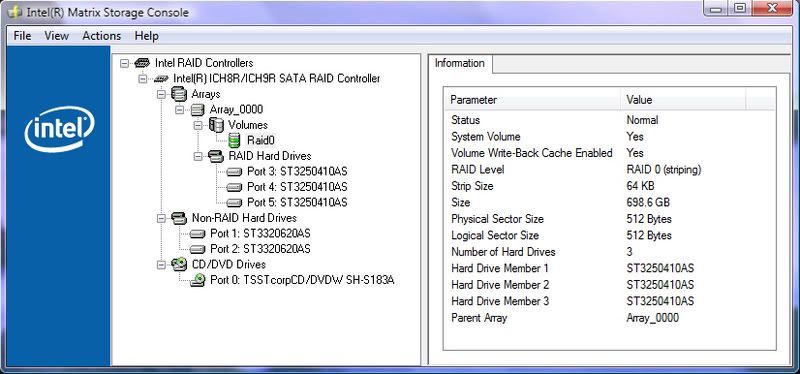

I've been talking about this in the fusion thread. Essentially, there is no reason they can't RAID 0 internally within an SSD. A normal drive has platters and moving parts, and the number of heads is limited by friction, heat and space. SSDs are basically a few tiny chips, so space is not an issue, and a tiny controller chip could handle RAID 0 style access within the drive: say, 8x4GB chips striped inside the drive. As of today, every SSD should be able to max out the 300MB/s cable. It's utterly ridiculous to bring out a single, quite poorly performing chip when multiple cheaper chips striped within the drive would be massively better. It would also spread the usage pattern across the chips, which could help, as they have a fairly limited number of write cycles.
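To make the idea concrete, here's a rough sketch of how RAID 0 style striping could map a logical address onto 8 chips. This is only an illustration, not how any real SSD controller works: the 4096-byte stripe size and the function names `locate` and `chips_touched` are my own assumptions.

```python
NUM_CHIPS = 8        # from the post: 8 chips striped inside the drive
STRIPE_SIZE = 4096   # bytes per stripe unit (assumed value, not from any real drive)


def locate(offset, num_chips=NUM_CHIPS, stripe_size=STRIPE_SIZE):
    """Map a logical byte offset to (chip index, byte offset on that chip)."""
    stripe = offset // stripe_size            # which stripe unit the byte falls in
    chip = stripe % num_chips                 # stripe units rotate round-robin over the chips
    row = stripe // num_chips                 # how many full rows of stripes come before it
    chip_offset = row * stripe_size + offset % stripe_size
    return chip, chip_offset


def chips_touched(offset, length, num_chips=NUM_CHIPS, stripe_size=STRIPE_SIZE):
    """Count how many stripe units of one request land on each chip."""
    counts = [0] * num_chips
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    for stripe in range(first, last + 1):
        counts[stripe % num_chips] += 1
    return counts


if __name__ == "__main__":
    # A 32 KiB request starting at offset 0 touches every chip exactly once,
    # so all 8 chips can work on it in parallel.
    print(chips_touched(0, 8 * STRIPE_SIZE))
```

A big sequential transfer ends up split evenly over all the chips, which is where both the speed-up and the spreading of wear would come from.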

Basically anyone can RAID 8 normal SATA drives now, but space-wise it's a pain. Likewise, 8 SSDs need 8 ports, which is just a pain too. The goal would be to RAID 0 multiple smaller chips within the drive. For me that was 99% of the reason to make SSDs at all, but they've screwed the pooch. Even if SSDs were 100% better than other drives, normal drives would still sell: TV, films, pron, all need big cheap storage, and speed is basically not needed.