Well, I decided to just get the non-3D version, the 7950X. I game at 4K (doubt I would see that much difference) and do productivity workloads, so overall it's probably a better option, plus I was able to pick it up new for £560. Quite pleased about that, just have to buy the rest of the system now.

Poll: Ryzen 7950X3D, 7900X3D, 7800X3D

- Thread starter kindai

I actually think it is going to be 16x 8CCD because it is also listed as 16 blocks of 16MB L3 cache, so one block of L3 cache per 8-core CCD. It is also, interestingly, the same socket size, so how they fit 4 more CCX on I don't know, but the spec certainly does not support 8x 16CCD.

@Curlyriff this is Genoa, 12x 8-core CCDs. 96 cores.

Look at the size of it, one slab of a CPU....

It's comedically large.

Bergamo is meant to be coming out mid this year.

Edit: ignore second part. It is going from 5nm to 4nm so I expect the density is enough to get 16x8 CCD with that reduction but remain with the same CCD count honestly then based on the cache split.

I actually think it is going to be 16x 8CCX because it is also listed as 16 blocks of 16MB L3 cache, so one block of L3 cache per 8-core CCX. It is also, interestingly, the same socket size, so how they fit 4 more CCX on I don't know, but the spec certainly does not support 8x 16CCX.

Further to that, the Zen 4 core the Epyc is based on is the same node size, so be it 8x16CCX or 16x8CCX, the physical size requirement would be the same, as it is the same number of individual cores, i.e. 128.
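For anyone who wants to sanity-check the numbers being thrown around, here is a rough sketch in Python. The helper name `total_cores` is made up for illustration, and the chiplet counts are just the ones quoted in the posts above, not a confirmed spec.

```python
# Hypothetical helper: total cores for a package built from identical chiplets.
def total_cores(chiplets: int, cores_per_chiplet: int) -> int:
    return chiplets * cores_per_chiplet

# Figures as quoted in the thread (assumptions, not an official spec sheet).
genoa = total_cores(12, 8)      # 12 chiplets of 8 cores
layout_a = total_cores(16, 8)   # 16 chiplets of 8 cores
layout_b = total_cores(8, 16)   # 8 chiplets of 16 cores
l3_total_mb = 16 * 16           # 16 blocks of 16MB L3

print(genoa, layout_a, layout_b, l3_total_mb)
```

Either layout lands on the same core count, so the core count alone can't tell you which one is in use. The cache block count is the only thing in the quoted figures that hints at the split.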

CCX or CCD? i'm talking about CCD.

If we go back to Zen 1 they had 2X 4 core CCX (Core Cluster) per CCD (Core Die) They could very well be going back to that layout.

The difference between Zen 1 and Zen 2 and on is Zen 1 had dedicated L3 cache for each 4 core cluster (CCX) 8MB for a total of 16MB L3 and 8 cores per CCD.

Zen 2 and on has two 4 core sides but the 32MB L3 in the middle is shared between both sides, they are one cluster, so they are not CCX, they are just CCD.

Sorry 16x8CCD so will still be 2x4CCX per CCD. I can't see it going 2x8CCX to go to 8x8CCD. Just add the additional CCDs. Again going back to that a single CCD has a single Cache block. Hence the same premise where Cache is shared through a CCD specifically and not a CCX.

A complete liar.

You will be incapable of quoting me saying that anywhere.

Don't bother with him. This is the same person who felt he needed to advise people on this forum that the premium for an Asus Strix 4090 didn't give him the best 4090 overclocking performance by virtue of paying more money. Then got stroppy when this naivety was pointed out.

As if people here are that naive lol. Well some are but point stands.

Ok

You said what matters is how it performs at the settings people use. 1080p or even 720p at low settings, is that what people are buying £700 cpus for?

The tests force a cpu difference that doesn't matter. That is the farce. At least productivity tests can claim a grasp on reality for the end user.

Then when people started mentioning your stupidity you didn’t like it!

This is what there was and exactly where those words came from:

It’s how it performs at the setting used by most that matter. Looking for the best possible example is just marketing as 99.9% of the time it’s wrong. That ends in forums full of “why is my CPU not 50% faster?” posts.

It's the current trend to do benchmarks at unrealistic low graphic settings and claiming gaming benefit or "crowns" as if the cpu is so important these days.

At least some reviewers have the decency to admit it's a farce to talk about comparing gaming performance when 10 cpus are doing average 300fps+ and the moment the resolution goes up everyone is trapped by what the gpu can do.

It’s just removing as much as possible so the test is going to show the CPU performance difference. That’s what people are interested in, how much faster the CPU is, not the GPU.

I know it's showing a cpu difference.

You said what matters is how it performs at the settings people use. 1080p or even 720p at low settings, is that what people are buying £700 cpus for?

The tests force a cpu difference that doesn't matter. That is the farce. At least productivity tests can claim a grasp on reality for the end user.

That’s not what I said

True, you are not in fact the person I quoted the first time

I do agree however that these games are cherry picked to show the best case scenario, but that’s true for all.

If you want to see how a CPU performs then you have to test with 720/1080p.

I see it more as forcing the use of games as a cpu benchmark past the point it is useful for the end users.

The only interesting thing about games and cpus at the moment is how throwing cache into the mix improves experience, which might or might not be measured in fps.

And some time later with nothing of value being said we finish on:

Completely incorrect.

It is what it is. There is a logic to doing it the way it is done. The value of claiming to be the best cpu for gaming is terrible. I don't think there's something to argue over but apparently there is!

Make more false statements about what I said despite being there in the discussion yourself.

Here we are just after the launch and guess what, the value claim worked out just fine.

It's absolutely insane to me that people don't understand why 720p reviews are the only ones that matter. Nobody is making the argument that you should buy the fastest 720p CPU. The argument is you should watch a 720p review in order to make an informed decision. That's it, whether the extra performance is going to be worth it for you down the line when you eventually upgrade GPUs is up to you, but you can't make that decision if you don't watch that 720p review.

I won't mention Intel since this is AMD's thread, so: back in 2020 the 5800X was released for 450€. At the time, you could buy a 3700X for 280€. Someone looking at 4K results would be like, holy cow, the 5800X costs about 60% extra money for 0% performance at the resolution I'm playing at. And he would be correct, but fast forward to today, you can upgrade to a 4080 on a 5800X at 4K and still be largely fine. You can't do that on a 3700X. That extra 170€ gave you longevity, and you wouldn't know at the time unless you saw the 720p reviews.
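If you want to check the premium from those two launch-era prices yourself, a quick sketch follows. The variable names are just for illustration, and the prices are the ones quoted in the post, not verified retail data.

```python
# Prices in EUR as quoted in the post above (not independently verified).
price_3700x = 280
price_5800x = 450

extra = price_5800x - price_3700x          # absolute difference in EUR
premium_pct = extra / price_3700x * 100    # premium relative to the cheaper chip

print(f"extra: {extra} EUR, premium: {premium_pct:.1f}%")
```

The same two lines work for any pair of CPUs you are weighing up. Just keep in mind the premium is relative to the cheaper part.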

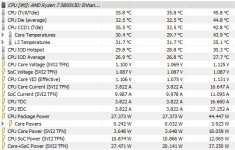

Wanted to back up @Bencher here with his statement regarding power draw, which people were skeptical about. He's right in that RPL uses very low power and is efficient when doing normal tasks.

I wanted to capture the full screen so it's all very transparent. You can see Chrome with 6 tabs open, one of them streaming Twitch, plus Outlook, Excel with a macro-heavy file loaded, Teams and Discord. The CPU is running at 11w total, averages 16w over the 3 mins of runtime and barely has a mini spike to 38w.

The 16w average is very good no matter how you spin it.

This is something that would be a typical desktop usage scenario for me.

My 7950X3D arrived despite the DPD driver doing his best to make as little effort as possible to knock on my door. Managed to chase him down the drive and got him to deliver the cpu after about a dozen sighs that I wasn’t in when he knocked.

They are not even managing to do that today here. Delayed in transit apparently until tomorrow.

Ha! I just had an email at 13:01 to say they'd missed me.. I checked the doorbell camera and the bugger never rang the bloody doorbell (The door bell does 'human' detection), must have knocked and no one heard him.. I'm getting it redelivered to a local shop tomorrow.

I know this isn't the thread, but I've been on the fence about which X670E motherboard, going between the £300-£350 boards like the Gaming X and either the MSI Carbon or Asus Strix E-E for £520ish.. but then saw a review of the ASUS B650E-E which aside from power delivery, has all the main features for the price of an entry level X670 so made an impulse purchase, purely based off the reviews comparing it to the X670E-E which reviewed well and was recommended on HUB.

That's too high

My 12900k is at 8.4w after 1 hour 40 minutes.

And we are both watching the Lima Major lol

That’s my point. I’m sick of trying to explain to people why these tests are important. All they keep saying is “who plays at 720p”

“The magic of e cores.”

Don't think it's the e-cores, it's the monolithic die. Laptop AMD CPUs are also as naughty as my 12900k, love them, they sip power at normal everyday tasks.

You can't make a horse drink, man

My plan is to keep my current CPU until a GPU I buy at some point in the future is going to get bottlenecked by it. The 4090 didn't do it, so waiting for the 5090 now. That's something that a cheaper, slower at 720p CPU wouldn't give me. Doesn't take a scientist to figure this out but whatever, what can you do