Can't wait for some facts. Has AMD mentioned when they plan on enlightening us as to what they have cooked up?

After RTX 5070 reviews.

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.

Slightly off topic, but I know the 9070XT is tipped to have roughly the same RT performance as the 4070 Ti. What sort of performance bump was there from the 3080 to the 4070 Ti for ray tracing?

22%

I don't understand. Why is it not 18%? I've been staring at the image like it is some sort of brain teaser lol.

What is 100 as a percentage of 82?
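Both numbers describe the same gap. They just use a different baseline. Here is a minimal Python sketch, assuming the chart puts the 4070 Ti at 100 and the 3080 at 82. That card-to-score mapping is inferred from the question above and is not stated on the chart itself.

```python
def percent_faster(fast: float, slow: float) -> float:
    """How much faster `fast` is than `slow`, as a percentage of `slow`."""
    return (fast / slow - 1) * 100


def percent_slower(slow: float, fast: float) -> float:
    """How much slower `slow` is than `fast`, as a percentage of `fast`."""
    return (1 - slow / fast) * 100


# Assumed relative scores read off the chart: 4070 Ti = 100, 3080 = 82
print(f"4070 Ti vs 3080: {percent_faster(100, 82):.0f}% faster")  # 22% faster
print(f"3080 vs 4070 Ti: {percent_slower(82, 100):.0f}% slower")  # 18% slower
```

Dividing by the slower card gives "X% faster", and dividing by the faster card gives "Y% slower". That is why 22% and 18% are both correct descriptions of the same gap.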

Definitely take this with a grain of salt... How are the AIB cards that big at 305W, with 3x 8-pin power? Even if an AIB card draws 20% more power, that's still just about within what the PCIe slot and 2x 8-pin can supply. The power and cooling don't add up for that rumour.
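As a rough check, compare that claim against the standard connector ratings: 75 W from a PCIe x16 slot and 150 W per 8-pin PCIe connector. The 305 W board power is the rumoured figure, and the 20% AIB uplift is the post's own assumption. Neither is a confirmed spec. A quick Python sketch of the budget:

```python
PCIE_SLOT_W = 75   # max power a PCIe x16 graphics slot supplies (PCIe CEM spec)
EIGHT_PIN_W = 150  # rated max for one 8-pin PCIe auxiliary power connector


def board_power_budget(eight_pin_count: int) -> int:
    """Maximum in-spec board power: slot power plus the 8-pin connectors."""
    return PCIE_SLOT_W + eight_pin_count * EIGHT_PIN_W


reference_tbp = 305            # rumoured board power in watts (unconfirmed)
aib_tbp = reference_tbp * 1.2  # assumption: an AIB card draws 20% more

print(f"AIB estimate:    {aib_tbp:.0f} W")
print(f"2x 8-pin budget: {board_power_budget(2)} W")
print(f"3x 8-pin budget: {board_power_budget(3)} W")
print("Fits on 2x 8-pin:", aib_tbp <= board_power_budget(2))
```

Under these assumptions, roughly 366 W sits just inside the 375 W that a slot plus two 8-pins can deliver. A third 8-pin (525 W budget) would only be needed well beyond that.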

Call me fussy, but I also don't appreciate the recent trend of coolers thicker than 2 slots. They make other PCIe slots inaccessible and unusable, and they don't give significantly better temps.

Assuming similarity in (raw) gaming performance between the 5070TI and the 9070XT then 600 quid still sounds too much for the AMD card to me.

With the amount of additional non-gaming utility with Nvidia cards and the way it all "just works" you are effectively getting a suite of products with team green's offering. If you're the average Windows user, AMD (for now at least) are only really offering you one function: gaming.

Furthermore, that gaming function will always lack the sophistication of Nvidia's software suite (and their formidable R&D budget) and will always lag behind in this regard.

Therefore there needs to be a significant difference in price to reflect all of this. It's a little bit like the difference between buying a PC and a console: even if the gaming experience was similar, I'd always expect to pay more for the PC because of everything else it can do.

It's not just mindshare...

You're probably right, knowing AMD, but I think it would be a mistake to price it higher than the 5070.

My understanding is that the 7800XT sold well when it hit the 450 (and sometimes even lower) mark. I think AMD will surely have seen the data on this and so I hope that they will cut to the chase and just come out of the gate with that sweet spot price. Given inflation, I think an RRP of 499 (assuming that is still significantly profitable) should be the aim.

No problem.


The point was not that I am personally overjoyed at the prospect of paying more for the Nvidia product. The point is that given Nvidia's "suite of products" beyond just gaming and their extra software tools, their products are more versatile. The core logic is that value comes from more than just raw performance. Features, software quality, and versatility contribute to a product's overall worth, allowing a company like Nvidia to command a premium price even if AMD offers similar gaming power.

Intel understand this which is why they are targeting a segment of the market and coming in cheap. AMD should follow suit.

This is a value-neutral, unemotional analysis of the situation. Not the desire of my heart. I would like Nvidia to charge significantly less than they do! But (for now at least) they don't have to. So hopefully AMD will come in at the right price and lay a claim to that segment of the market. That will give them a solid foundation to build on for the next gen.

For what it's worth, I'm hoping to buy the AMD card if the price is right. If it isn't I'll likely go for a used 40 series.

Which is it Tom? Are you going to pick one when the reviews come out and say you were right?

Which colour is the 9070XT supposed to be?

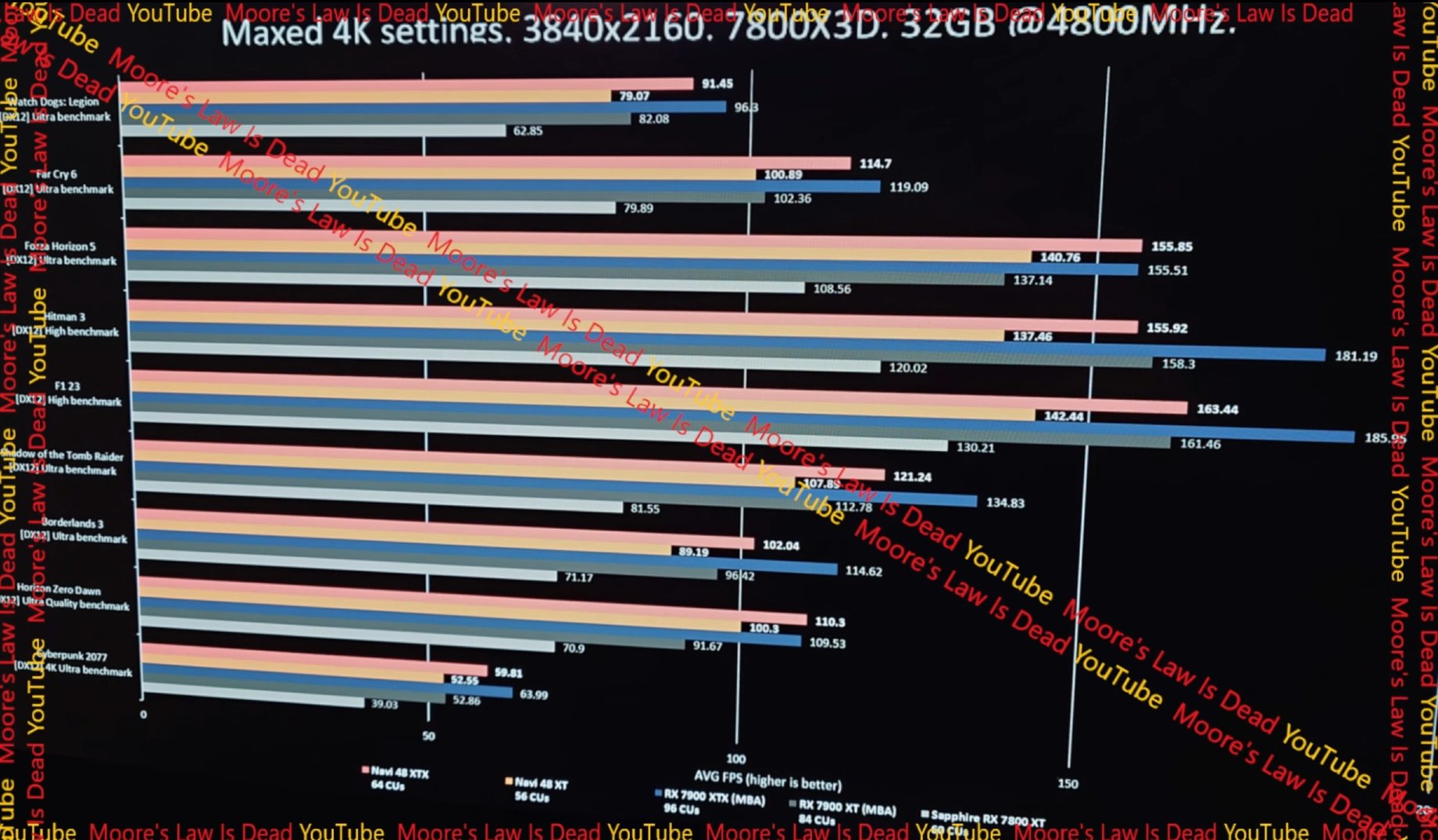

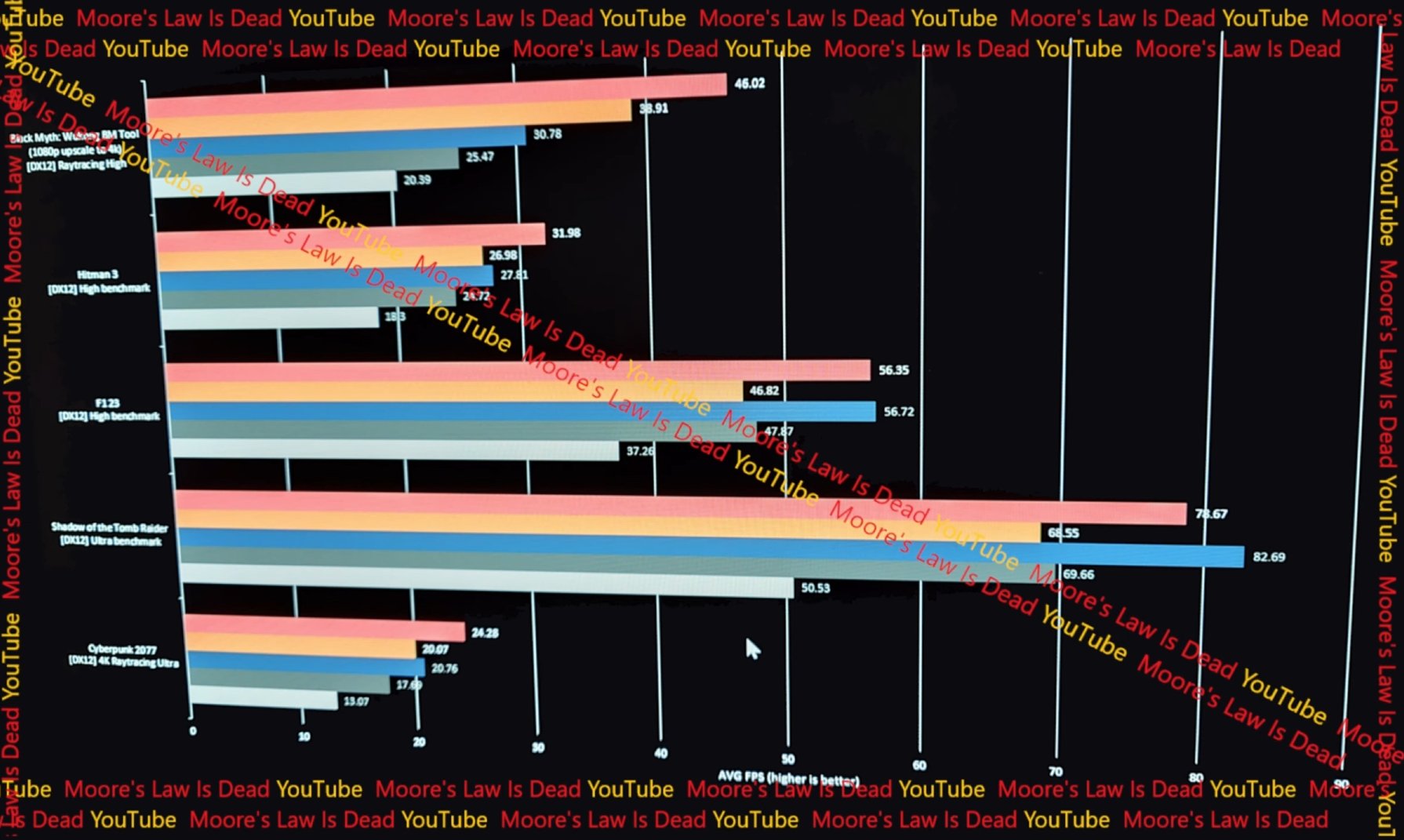

What do you mean 5 wildly different bars? It clearly shows you at the bottom of the first slide that the colors represent 5 different cards. The pink and the orange are the 9070XT and 9070. In CP2077 without RT, the 9070XT is faster than the 7900XT by around 7fps and only 4fps slower than the 7900XTX.

The second slide shows games with RT enabled in which case the 9070XT is faster than the 7900XTX in the heavy RT games.

Nah, it took 2 seconds to do on my Samsung S24 Ultra. Last I checked, there's no Nvidia gear in there. Lol

Nah, the S24 Ultras only have Snapdragon.

I'm interested in the encoder AMD have worked on. Any info on this? Or is it just AV1 they've made better?

It'd be nice if they've worked on H.264 and got it close to NVENC.

Still can't use AV1 for Twitch.

It's been a long time since I upgraded my GPU, but I'm interested in a decently priced 9070. I don't understand why these new AMD cards are predicted to be slower than the 7900XT(X) cards. Why is this? I assume this is just raster performance, but still, why can't they make them faster?

Will the 7900 line still be made and updated?