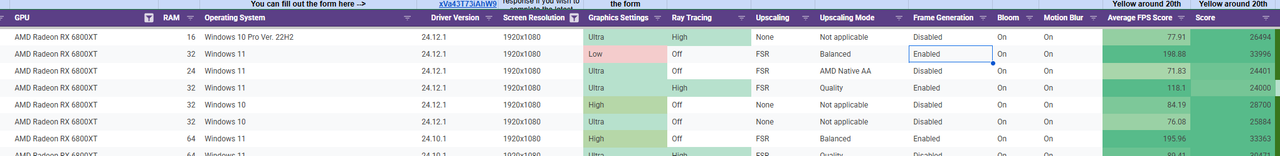

Just buy second-hand 6800 XT cards. They are dirt cheap.

4070 Ti - 161fps

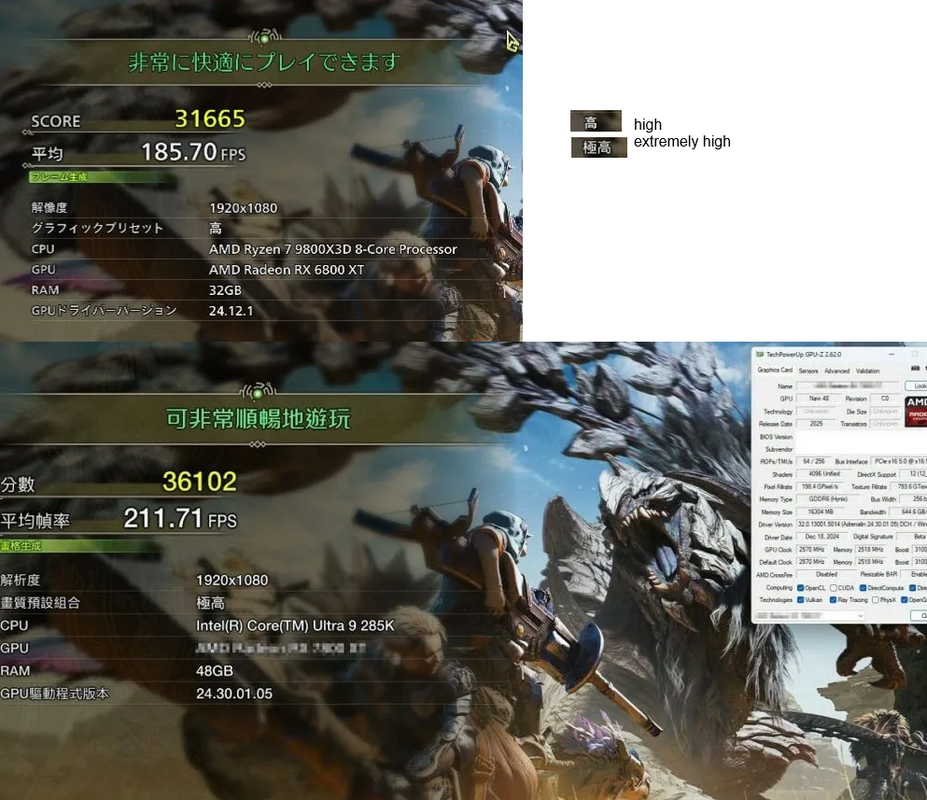

6800 XT - 186fps

9070 XT - 212fps

4090 - 221fps

This all sounds legit.

@terley

I already posted these. If you believe these benchmarks, then I have a bridge to sell you.

Although to be fair actually posted https://forums.overclockers.co.uk/threads/the-amd-rdna-4-rumour-mill.18984360/post-37653042 first.

We had to drop settings to Ultra/Very High to get 90 fps.
