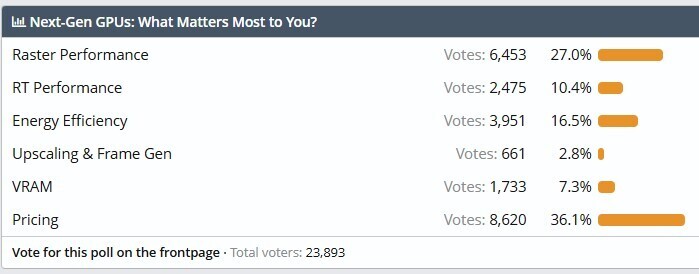

Next-Gen GPUs: Pricing and Raster 3D Performance Matter Most to TPU Readers

Generational gains in raster 3D graphics rendering performance at native resolution remain eminently desirable to anyone who has followed the PC hardware industry for decades. With Moore's Law in effect, we had grown used to near-50% generational increases in performance, which enabled new gaming APIs and upped the eye-candy in games with each generation. Interestingly, ray tracing performance takes a backseat, polling not even 3rd, but 4th place, at 10.4% or 2,475 votes. The 3rd place goes to energy efficiency.

The introduction of 600 W-capable power connectors was an ominous sign of where power draw is headed with future generations of GPUs. The semiconductor fabrication industry is struggling to make cutting-edge sub-2 nm nodes available, and for the past 3 or 4 generations, GPUs have not been built on the very latest foundry node. For example, by the time 8 nm and 7 nm GPUs came out, 5 nm EUV was already the cutting edge, and Apple was making its iPhone SoCs on it. Both AMD and NVIDIA would go on to make their next generations on 5 nm, while the cutting edge had moved on to 4 nm and 3 nm. The upcoming RDNA 4 and GeForce Blackwell generations are expected to be built on nodes no more advanced than 3 nm, but these come out in 2025, by which time the cutting edge would have moved on to 20 A. All this impacts power, because performance targets end up wildly misaligned with the foundry node available to GPU designers.

Our readers gave upscaling and frame-gen technologies like DLSS, FSR, and XeSS the fewest votes, with the option scoring just 2.8% or 661 votes. They do not believe that upscaling technology is a valid excuse for missing generational performance improvement targets at native resolution, and take any claims such as "this looks better than native resolution" with a pinch of salt.

All said and done, the GPU buyer of today has the same expectations from the next-gen as they did a decade ago. This is important, as it forces NVIDIA and AMD to innovate, build their GPUs on the most advanced foundry nodes, and try not to be too greedy with pricing. NVIDIA's real competition isn't AMD or Intel; rather, PC gaming as a platform competes with the consoles, which are offering 4K gaming experiences for half a grand, with technology that "just works." The onus then is on PC hardware manufacturers to keep up.