Some of you guys are going to have serious meltdowns as UE5.5+ games in coming years will default to hardware ray tracing for Lumen.

There will be meltdowns, but not for the reason you think.

What do gamers actually think about Ray-Tracing?

- Thread starter Purgatory

Caporegime

- Joined

- 9 Nov 2009

- Posts

- 25,315

- Location

- Planet Earth

They'll upgrade.

To what exactly? An RTX5070? They will be buying the same 50 and 60 series cards - many of these will be laptops, so probably not that great. The CPUs are slower too.

Steam proves this, as do all other measurements. There is also the fact that more people are buying gaming laptops, which is supported by market numbers too.

Yep, the mid range cards have always been the most popular by far. People who spend £500+ on a GPU are the minority.

If a game can't run on them, it just won't sell very well.

The issue with enthusiasts on tech forums is that many of them are absolutely clueless about mainstream cards.

Firstly, half of dGPUs are in laptops, and the laptop versions of these cards perform much worse. Many even have less VRAM. The laptop RTX3060 had only 6GB.

Secondly, mainstream gamers are price sensitive. Look at the Steam top 10: it's made up mostly of cards under £400. People on this forum might be willing to up their dGPU budget by huge amounts each generation, but that's not the reality for most buyers.

Thirdly, this has been the performance jump since 2018:

1.) RTX2060:

20% over the GTX1070, which launched at around the same price.

2.) GTX1660TI:

33% over a GTX1060.

3.) RTX3060:

20% generational improvement after two years.

4.) RTX4060:

Under 25% generational improvement. Raytracing improvements are at 20% to 30% too.

So we are averaging between 20% and 30% improvement for each new generation.

The problem is that Nvidia and AMD mainstream cards are not great. After 6 years, the RTX4060 is only 50% faster than an RTX2060 in rasterised games and 60% faster in RT. An RX7600 is probably around the same over an RX5600XT.
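As a quick sanity check on the figures above: per-generation gains compound multiplicatively rather than add up. The sketch below uses only the rough percentages quoted in this post (20% for the RTX3060 and just under 25% for the RTX4060); the function names are made up for illustration.

```python
from math import prod


def compound_uplift(gains):
    """Total speed-up factor from a list of per-generation gains (0.20 == 20%)."""
    return prod(1.0 + g for g in gains)


def annualised_gain(total_factor, years):
    """Equivalent constant yearly gain that yields total_factor after `years`."""
    return total_factor ** (1.0 / years) - 1.0


# RTX2060 -> RTX3060 -> RTX4060, using the rough figures quoted above.
total = compound_uplift([0.20, 0.25])
print(f"total uplift: {total:.2f}x")
print(f"per year over 6 years: {annualised_gain(total, 6):.1%}")
```

That works out to about 1.5x in total, or roughly 7% a year, which lines up with the "only 50% faster after 6 years" figure.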

That means that an RTX5060, unless it's a new 8800GT, will be slower than an RTX4070 and have less VRAM.

An RTX4070 already struggles in newer games with RT, let alone AMD cards.

If the people obsessed with RT on here want more intense RT and PT everywhere, it will need:

1.) Nvidia to actually take the mainstream seriously, especially as they sell over 80% of dGPUs

2.) AMD to improve its RT

3.) Intel to step up too

4.) Consoles to step up too

5.) Devs to actually optimise games properly

If not, all you will have in 99% of RT games is economy RT implementations using FG for "optimisation" instead of doing a proper job. Just wheeling out a few examples of games which might do otherwise won't prove most games will push things.

Not everyone is CDPR.

ATM, we seem to have mostly poorly optimised, mediocre looking games which need excessive hardware and use FG as a prop.

Considering all three companies would rather sell more AI hardware, expect more mainstream stagnation.

I've said it before and I'll repeat myself again. Graphical fidelity needs to go on the back burner for a bit, and NPC AI, physics and sound need to move to the forefront, with proper gameplay still holding a clear top spot. Games have looked good for a while now, but all the other areas are advancing rather slowly IMHO. At least slower than I want them to.

AI in games has gone backwards even compared to the 90s. Half-Life 1 still has better AI than modern FPSs. The last good AI I've seen was probably Alien: Isolation, 10 years ago. I don't think you could even call it AI in the majority of games; they just stand there and attack or follow set instructions.

With things like ChatGPT etc., you'd think someone would have found a way to integrate that into a game by now. Someone made a Skyrim mod, but that's about as far as it's got.

There doesn't seem to be enough talent left in the games industry to raise the bar now.

As much as I love my graphics, I completely agree with this.

But to have better AI, the dGPUs still need to be fast enough. An RTX4060 is 50% to 60% faster than an RTX2060 after 6 years, with only a 2GB increase in VRAM. AI needs enough VRAM. FG and RT also need more VRAM.

These improvements are barely enough to cover someone upgrading to a higher resolution display, or turning up AA, even with upscaling and FG factored in.

Considering the state these games are released in now, with so many features pared back, it's quite clear many companies are just cutting back on staff to increase their margins.

AI would be processed by the CPU and stored in system ram, not the GPU.

Most of the latest interactive NPC AI demos use the graphics card to run some of the calculations, not the CPU.

If this is about general NPC combat movements, the models are not well threaded AFAIK, so they are dependent on single core performance.

One of the reasons why Crysis was so CPU dependent is that it used two different NPC AI movement systems.

One for walking NPCs and the other for the ones which flew about. Nowadays most games take the easy way out and just re-use the same walking model for everything. It's why Crysis 2 re-invented the aliens as walkers.

I suspect it is not helped that consoles also have weak CPUs, from Jaguar to Zen+ performance cores. Once these games went multi-platform, they had to balance the CPU requirements. The shinier graphics have a CPU overhead too.

Unless the next generation consoles use better CPUs, we are stuck there. It's one of the weirdest design decisions Sony/Microsoft have made, because even general performance is held back.

Soldato

- Joined

- 14 Aug 2009

- Posts

- 3,227

They'll upgrade to whatever their budget and the market will allow. If Steam were the only guide, plenty of games would not have seen the light of day, and yet... here they are.

A couple of issues with ChatGPT:

1) You need a lot of power to drive an offline model, even a simpler one, in order to get something decent and beyond the basic stuff already done.

2) Probably not a lot of control, plenty of training and Q&A.

One of the reasons why Crysis was so CPU dependent is that it used two different NPC AI movement systems.

One for walking NPCs and the other for the ones which flew about. Nowadays most games take the easy way out and just re-use the same walking model for everything. It's why Crysis 2 re-invented the aliens as walkers.

It was just poorly optimized.

AMD did it way back on the GPU, but even on the CPU you have well optimized games like Ashes of the Singularity with lots of AIs. Like any other tech, you just need devs to implement it - and care.

Later edit: that demo had more than 16,000 AI agents, each simulated, going about their tasks, reacting to danger, generating new paths to take, etc. And that was around 2008.

The industry in general stopped taking risks.

- RTS as a genre peaked in 2007 with Supreme Commander: Forged Alliance

- RPGs... As much as Baldur's Gate 3 is lauded, the last innovative one was Middle-earth: Shadow of Mordor with the Nemesis system

- Shooters likely peaked in 2004 with HL2

- Flight sims... FS2020? The digital twin was innovative

Other genres I'm not sure about but I'm sure it's been years since the last truly revolutionary title.

RT, in a way, is a bit like the movie remake trend. It gives the illusion of new and shiny, and it sells to management as cost cutting, but ultimately it feels like a fig leaf over industry stagnation.

AI would be processed by the CPU and stored in system ram, not the GPU.

So, why do we need so many tensor cores in GPUs again? Because DLSS barely uses them as is, and they are designed to process AI after all. That aside, AI models have moved on since the good old days, and if you want proper behaviour based on proper models, it will eat tons of RAM and VRAM. Which is cheap enough these days, but Nvidia will just not give people more, because. Even relatively simple "nano" AI models on mobile phones can easily eat a few GB of RAM.

I think they are hinting at the vram mate..

Certainly are. Good old NVIDIA. Oopsy.

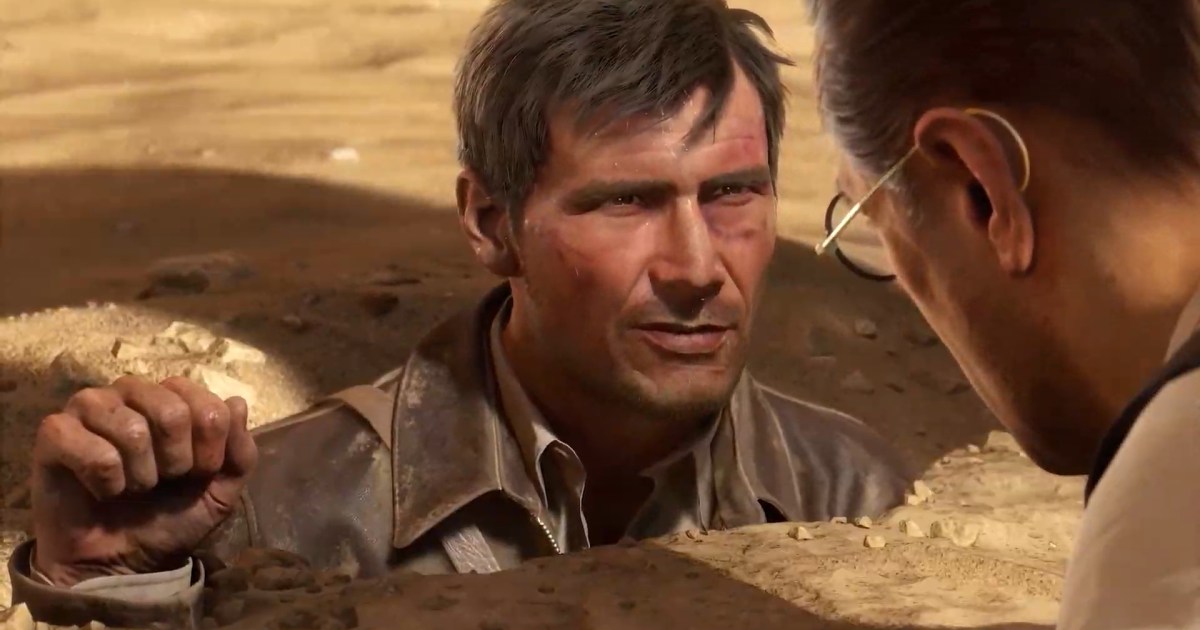

Indiana Jones and the Great Circle proves Nvidia wrong about 8GB GPUs

Indiana Jones and the Great Circle looks great, but Nvidia's low VRAM capacity struggles in the game.

Says it all really:

"The Intel Arc A770, for example, which is a budget-focused 1080p graphics card, beats the RTX 3080, which was the 4K champion when it launched. Why? The A770 has 16GB of VRAM, while the RTX 3080 has 10GB."

Permabanned

- Joined

- 28 Sep 2018

- Posts

- 0

Hahaha, I'm sure the people suffering with the Arc A770 since launch are finally feeling good about their purchase.

Soldato

- Joined

- 21 Jul 2005

- Posts

- 20,701

- Location

- Officially least sunny location -Ronskistats

GeForce NOW to the rescue, I hear!

But but up scaling reduces VRAM requirements… or something.

There's ZERO FSR/XeSS support. I thought NV didn't block AMD/Intel upscaling, or is it only the devs' fault when NV is partnered?

Conveniently for the game sponsor DLSS3 is the only FG option...

RTGI in Indiana Jones

4K DLAA, locked at 100fps. your move, RT h8ers

Does the PC have RT reflections too or Screen Space like the Xbox?

There will be meltdowns but not for the reason you think.

I just found this channel on YouTube today:

Threat Interactive

Official YouTube Channel of The New Indie Game Studio: Threat Interactive Website: https://threatinteractive.wordpress.com/ X.com : https://x.com/ThreatInteract Reddit: https://new.reddit.com/user/ThreatInteractive Official Threat Interactive Discord: https://discord.gg/7ZdvFxFTba Email...

It's run by actual game developers who are clearly very knowledgeable. The founder of that group dismantled a few games with proper tools to show why performance sucks in them, revealing all the steps taken, what they do to the image and how much frame time they eat. It mostly shows that all these games have plenty of optimisation opportunities left on the table, where devs simply didn't care to change relatively simple things that would increase performance considerably. Devs, it would seem, just use stock UE settings, do not tweak anything, do not make TAA look/work better (it's easy to do apparently), do not even update DLSS libraries (trivial to do), etc. Simply speaking, the gut feelings I always had are all true - modern games are an unoptimised mess, often with visually worse effects than older games, masked by TAA and other tricks. E.g. modern games do not use real transparency anymore, but instead use dithering with TAA to mask it for things like hair - it's easier to manage and lowers production cost but produces a blurry image, which they don't seem to care about.
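To make the dithering point a bit more concrete, here is a toy sketch of screen-door (dithered) transparency with a 4x4 Bayer pattern, where averaging over frames stands in for TAA accumulation. It is only an illustration under my own assumptions (the per-frame pattern shift, the function names, plain averaging instead of real reprojection and history clamping) and is not taken from any engine or from the channel's videos.

```python
# 4x4 Bayer matrix: each cell holds a rank 0..15 used as an opacity threshold.
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def dithered_coverage(x, y, alpha, frame=0):
    """Return 1 if the pixel is drawn fully opaque this frame, else 0.

    The pattern is shifted every frame so that, over 16 frames, each pixel
    sees every threshold exactly once (an assumption made for this sketch).
    """
    col = (x + frame) % 4
    row = (y + frame // 4) % 4
    threshold = (BAYER_4X4[row][col] + 0.5) / 16.0
    return 1 if alpha > threshold else 0


def temporal_average(x, y, alpha, frames=16):
    """Average coverage over several frames, standing in for TAA accumulation."""
    return sum(dithered_coverage(x, y, alpha, f) for f in range(frames)) / frames


# A single frame is a hard on/off pattern...
print([dithered_coverage(x, 0, 0.5) for x in range(4)])
# ...and only the average over time approaches the intended 50% opacity.
print(temporal_average(0, 0, 0.5))
```

Each individual frame only ever contains fully drawn or fully skipped pixels, so the effect depends on something blending frames together, which is where the softness comes from.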

That channel has a whole video about Nanite, Lumen and other UE5 tech (revealing many flaws of these), also showing why DLSS and DLAA are actually considerably worse quality-wise for AA than proper TAA in motion (mind you, the TAA used in most games is NOT properly configured and that's why it sucks visually) - with examples of their own custom TAA config in Jedi Survivor. I also learned that most modern games use TAA for a lot of effects, especially in UE4 and UE5 but not only there - something one can't see in settings or turn off, as turning it off would cause horrible artefacts (TAA is used to mask these), yet it blurs the hell out of the image. That includes games that have DLSS/DLAA, where TAA runs on things like grass, hair, shadows etc. anyway, irrespective of DLSS/DLAA use.

And to be more on-topic - they show why it is not very smart for a dev to use PT/RT for everything in a game, as it kills performance and just looks really bad because of noise and other issues, which then have to be masked by various methods (most based on TAA adding blur), including denoising and other tricks. Instead, it could be used in a hybrid with other methods (new algorithms published in 2017 etc.) that would produce the SAME result, be way faster and also not generate any noise or artefacts (so no need for denoising, and games would look much sharper). Some games have already used such an approach partially, though not all combined. It's one of the reasons they're working on their own custom build of UE5 that is actually optimised to make games properly (and not just for the movie industry or Fortnite), with good sharp quality, no need for blurry masking of bad effects, no need for denoising etc., whilst being as good quality as possible with as low system requirements as possible.

And one addendum about VRAM: as they showed, it's not actually eaten by textures; a lot of it is eaten by various buffers etc. used by TAA to mask all the artefacts, noise and other bits caused by using lazy methods of rendering many effects. Fix that and suddenly games would not need so much VRAM anymore, and many could be run on much slower GPUs (even xx60 series). Modern GPUs are plenty fast if used smartly, it seems, much faster than consumers realise, just completely butchered by bad design in many new AAA games. Makes me wonder how much NVIDIA had to do with these big games being butchered by bad performance choices and bad implementation of things like TAA, as if someone wanted to make it look really bad in comparison to DLAA/DLSS, when in fact it can look better (much clearer, whilst still being fully stable) in motion, it seems...
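For a rough feel of how full-resolution buffers add up, the sketch below just multiplies width, height and bytes per pixel. The buffer names and formats are hypothetical examples picked for illustration, not figures from the channel or from any real game, so treat it as back-of-envelope arithmetic rather than evidence either way.

```python
def render_target_bytes(width, height, bytes_per_pixel):
    """Memory for one uncompressed full-resolution buffer."""
    return width * height * bytes_per_pixel


# Hypothetical set of per-frame buffers at 4K; names and formats are
# illustrative assumptions, not taken from any real game or engine.
BUFFERS_4K = {
    "scene colour (RGBA16F)": 8,
    "TAA history (RGBA16F)": 8,
    "motion vectors (RG16F)": 4,
    "depth (D32F)": 4,
}

total = 0
for name, bpp in BUFFERS_4K.items():
    size = render_target_bytes(3840, 2160, bpp)
    total += size
    print(f"{name}: {size / 2**20:.1f} MiB")
print(f"total: {total / 2**20:.1f} MiB")
```

Every extra full-resolution history or mask buffer adds another slice of this size, and the cost scales directly with output resolution.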
