Isn't he saying that those without an RT-capable GPU can't play the game, full stop?

I didn’t interpret it that way but you never know with our boy Nasher.

Nice try, but I'm getting 100 fps with a 3080 with the texture cache on High. It's a cache, not texture quality; textures are the same as on the highest setting.

Certainly are. Good old NVIDIA. Oopsy.

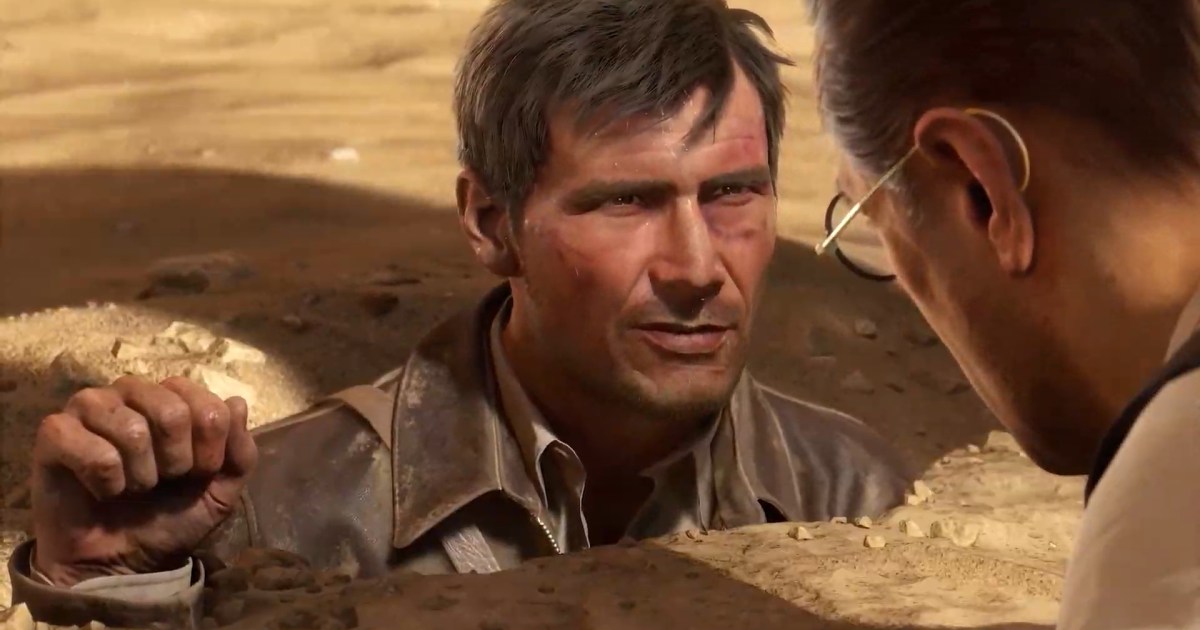

Indiana Jones and the Great Circle proves Nvidia wrong about 8GB GPUs

Indiana Jones and the Great Circle looks great, but Nvidia's low VRAM capacity struggles in the game.
www.digitaltrends.com

Says it all really:

"The Intel Arc A770, for example, which is a budget-focused 1080p graphics card, beats the RTX 3080, which was the 4K champion when it launched. Why? The A770 has 16GB of VRAM, while the RTX 3080 has 10GB."

Outwith PT, it's as smooth as butter with higher textures/LOD (reduced/no pop-in) if you have the VRAM to run it.

Not seen it in action, but is this making Unreal Engine 5 games look like a pile of unoptimised mess? Maybe letting game developers know that other game engines exist?

Not here. Still rocking the GTX 1080, which is no longer pulling its weight, especially in newer UE5 games. The problem as I see it is that, without upgrading, older games look and run better than more recently released ones. Luckily there really isn't much in the AAA space that interests me, but there are still handfuls of indie titles worth playing which are now being made with UE5. These tend to look and run terribly on the lowest settings.

Who says they can't play it, full stop? The game is available on GeForce Now, Xbox, and in just under a year will be on PS5 too. I'm sure those on GPUs so old that they can't make full use of modern PC games will also have a console to play on?

High < Supreme = 16GB. Intel's running Supreme; DF has highlighted the difference between High and Supreme:

Nice try, but I'm getting 100 fps with a 3080 with the texture cache on High. It's a cache, not texture quality; textures are the same as on the highest setting.

There will be meltdowns but not for the reason you think.

(...) don't look different based on colour grading and cinematography, with some being jaw-droppingly beautiful and atmospheric?

You conveniently forgot to mention these are greatly zoomed-in distant textures, which is why they look like a blurry mess regardless of setting. It's impossible to notice the difference during normal gameplay.

High < Supreme = 16GB. Intel's running Supreme; DF has highlighted the difference between High and Supreme:

In response to Intel's $400 GPU beating Nv's last-gen $700 4K flagship GPU at 1080p, Nv said 'Indiana Jones and the Great Circle still looks great at lower graphics settings'.

Nv has normalised hard-locking performance with VRAM, and now they're laughing at us.

You've totally glossed over the part where you wouldn't know if there is a difference because you can't enable it on the 3080: you simply don't know, because you can't enable it. :shrugs:

You conveniently forgot to mention these are greatly zoomed-in distant textures, which is why they look like a blurry mess regardless of setting. It's impossible to notice the difference during normal gameplay.

My 3080 still runs every game with no problem, and the money I would normally spend on upgrading has found a better use.

Anyway, the id Tech 7 engine exposes UE5 for the garbage engine it is, and unfortunately there is an ongoing flood of UE5 games. I don't understand why MS doesn't use id Tech 7 for all their games, since it's a fantastic engine and they own it.

I've yet to find a game that forces me to upgrade, and I've been playing the majority of the latest AAA games.

You've totally glossed over the part where you wouldn't know if there is a difference because you can't enable it on the 3080: you simply don't know, because you can't enable it. :shrugs:

The 3080 is still a great card within its limits, but when NV decides it's time for an upgrade, they've paid a dev to strangle their own users. :thumbsup:

Yes, id has a great engine, but it's killing Nv's VRAM choices all the way up the stack unless you buy the 4090, whereas UE is designed to run on a potato.

Let's see, a few examples: never-finished games and horribly buggy releases; horrible noise everywhere (even without PT, like in the newest COD; what the actual hell!); lazy publishers/devs (pick at will) doing what's cheapest rather than what's best for actual players; AAA games falling flat on their faces financially one after another because they are all bling and no substance, while prices keep rising? I'd rather go back in time, thank you.

It's time that people tasted the modern flavours and let go of the past.

That's not a new feature, and it ALWAYS kills FPS and/or introduces stutter etc. Quite a few games were crashing because of it in the past too, as they simply couldn't handle it either. For gaming it's absolutely useless: GPUs are only fast if they can access data quickly from local memory. Everything else has its uses in enterprise (to a degree), but not at home.

#For those not aware: in your motherboard BIOS settings you can share system RAM with the video card. It's like a Windows swap file in a way; it's a lot slower than VRAM and probably hurts your FPS if it ever gets used.
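To put rough numbers on "a lot slower than VRAM": when a discrete card spills over into system RAM, that data has to cross the PCIe link. The back-of-envelope sketch below assumes a stock RTX 3080 (320-bit bus, 19 Gbps GDDR6X) and a PCIe 4.0 x16 link. It compares theoretical peak bandwidth only and ignores latency, driver overheads and real-world efficiency, so treat it as a rough upper-bound comparison, not a benchmark.

```python
# Back-of-envelope peak bandwidth comparison.
# All hardware figures are assumptions: stock RTX 3080 VRAM vs a PCIe 4.0 x16 link.

def gddr_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak VRAM bandwidth in GB/s: bus width (bits) x per-pin data rate (Gb/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8


def pcie_bandwidth_gbs(lanes: int, gt_per_s: float, encoding: float = 128 / 130) -> float:
    """Peak one-direction PCIe bandwidth in GB/s after 128b/130b line encoding."""
    return lanes * gt_per_s * encoding / 8


vram = gddr_bandwidth_gbs(320, 19.0)  # assumed RTX 3080: 760 GB/s
pcie = pcie_bandwidth_gbs(16, 16.0)   # assumed PCIe 4.0 x16: ~31.5 GB/s

print(f"VRAM: {vram:.0f} GB/s, PCIe 4.0 x16: {pcie:.1f} GB/s, ratio: {vram / pcie:.0f}x")
# prints "VRAM: 760 GB/s, PCIe 4.0 x16: 31.5 GB/s, ratio: 24x"
```

Even on paper, anything fetched from system RAM arrives roughly 24 times slower than from the card's own memory, before latency is even considered.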

Watch some of the videos by the devs I linked earlier: you do NOT need RT/PT everywhere, and you do not need baked lighting either. There are other ways to do very good lighting in games (with very accurate GI, dynamic lighting etc.), and yet NVIDIA pushed the narrative that it's RT/PT or nothing (though with the 5k series it will likely be AI or nothing instead); that's one big lie that is only good for NVIDIA and perhaps lazy devs cutting costs, nobody else. The devs who showed what you describe are also heavily NVIDIA-sponsored and pushing the same narrative for the same reasons. That said, the Metro devs showed very good results without introducing horrible noise to the game and without completely butchering FPS, yet later devs couldn't achieve that anymore. Or didn't want to. Modern games are full of unoptimised code, often very easy to optimise, as has been shown by other devs (again, I linked one as an example, but there are others). It's a choice, not a necessity: gamers do not need it, and most gamers can't even run it.

What is forced about it? Baked lighting takes longer development time to do (...) developers have shown this in many behind-the-scenes documentaries.

That's completely inaccurate: modern gamers with a modern 4060 also have big trouble running such games, even at 1440p, be it because of pure performance or lack of VRAM. This is all clearly done to force people to buy more expensive/luxury products; that is really all there is to it. Gladly, it doesn't seem to be working: we have a handful of games pushing this, they usually sell badly (for various reasons, not just performance), and the majority of gamers don't buy anything above xx60-class GPUs anyway.

There is nothing forced about it; people can't stay stuck on 5+ year old GPUs that aren't capable of HW RT forever.

And yet prices were much, much lower for xx60 or even xx70-class cards; it can't even be compared. What people now have to pay for an xx60 card would have been enough to get something like a GTX 970 or higher back in the day. Ergo a mid-to-high-end GPU then, compared with entry level for gaming now. And that includes all the inflation from 2014 to today!

You are also forgetting that back in the early days of DX9x etc., one GPU generation only had a 2-year lifespan before everyone was "forced" to upgrade as API technology

What is stuck is corporations, in their never-ending greed, extorting gamers with higher and higher prices each year, whilst trying to push tech onto a market that's simply not ready for it. The GPUs that the actual majority of the market buys barely get faster each year, yet prices rise and more and more gamers simply get priced out of the market. That's where we are headed now. Not a future I look forward to.

Being stuck in the past does nobody any favours: either stay up to date every few years or get left behind. This week's DF Direct talks about this in detail, and they are right: people expecting to be able to run the latest engine tech on old HW is silly.

I've linked (in an easy-to-find gigantic post) devs showing exactly why UE5 itself is currently an unoptimised mess, and also that the devs of pretty much all UE5 games don't do any optimisation either and don't even adjust the default settings, but use UE5 as it comes. It can be done better on UE5; it just takes some work, which big publishers are unwilling to do. Why optimise meshes, for example, when you can slap Nanite on top of them? It works, but you throw away a bunch of FPS for no good reason. Why fit games in 8GB of VRAM when you can waste it on various buffers that use TAA to mask the horrible noise and artefacts of cheap effects, blurring the whole image to the point that even DLSS/DLAA and sharpening can't fix it well later? Why mix Lumen with other algorithms, known since 2017, that can produce accurate GI, dynamic lighting etc., when you can just turn it on and not bother, murdering FPS again? All these new toys are great for lazy devs, not for gamers, yet NVIDIA pushed the narrative that there's no other way to do it and it's either old raster or new shiny RT. But there are other ways, and SOME games use them with great results. Most do not.

Not seen it in action, but is this making Unreal Engine 5 games look like a pile of unoptimised mess? Maybe letting game developers know that other game engines exist?

Again, I linked earlier videos posted by actual devs working on realistic-looking games, showing that RT/PT (that includes Lumen, Nanite and other modern FPS-killers) is the wrong way to go, unless you want to kill FPS for no good reason and be lazy whilst pocketing fresh money from NVIDIA. It's not the only way to do things; it's the cheap and quick way, which is what both the GPU vendor and publishers love. And yet it's bad for gamers, bar the tiny minority with high-end GPUs, and even then things could be done much better. For example, a lot of static games, with no physics and no changes to the map or lighting, do not need many of the bits and bobs enabled in UE5, and yet devs enable them for no reason, because they do not know any better or simply do not care, and it kills FPS with zero visual difference.

Let's just throw all of the great coverage and breakdowns, and the talks with actual devs working on their games showing us exactly what is done with RT and now PT, out of the window; that will be the way, yeah.

I am not talking about the video Calin Banc commented on at all; I didn't even link it. I am talking about this channel: https://www.youtube.com/@ThreatInteractive. It's not a YouTuber; they don't run a YouTube channel as their main job. It's a founding member of a development group working on actual games, who has also been a very active member of the Unreal Engine community (easy to check) for quite a while now. The dude seems to know very well what he is talking about, and his YouTube videos are just him showing in practice, with real examples and proper tools, the frames generated by games in, for example, UE: not on screenshots or video but live in the editor, to show exactly what the game engine is doing at any given moment and what it does to the visuals. It's not marketing talk for any given vendor either.

As Calin Banc points out, there are a lot of holes in what that other YouTuber, who just appeared on the scene one day, is saying.