Soldato | Joined: 28 May 2007 | Posts: 10,225

I am reposting this, as it seems to have been missed the first time round.

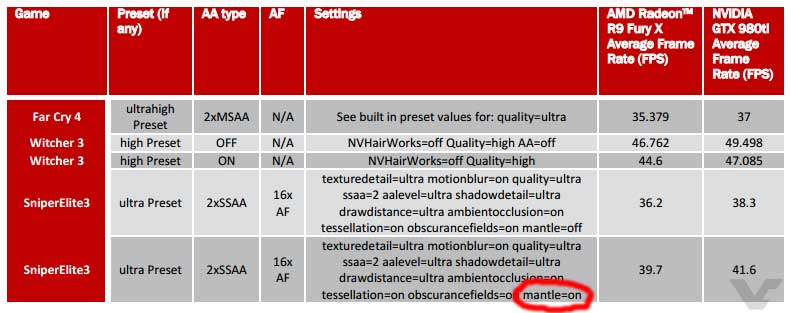

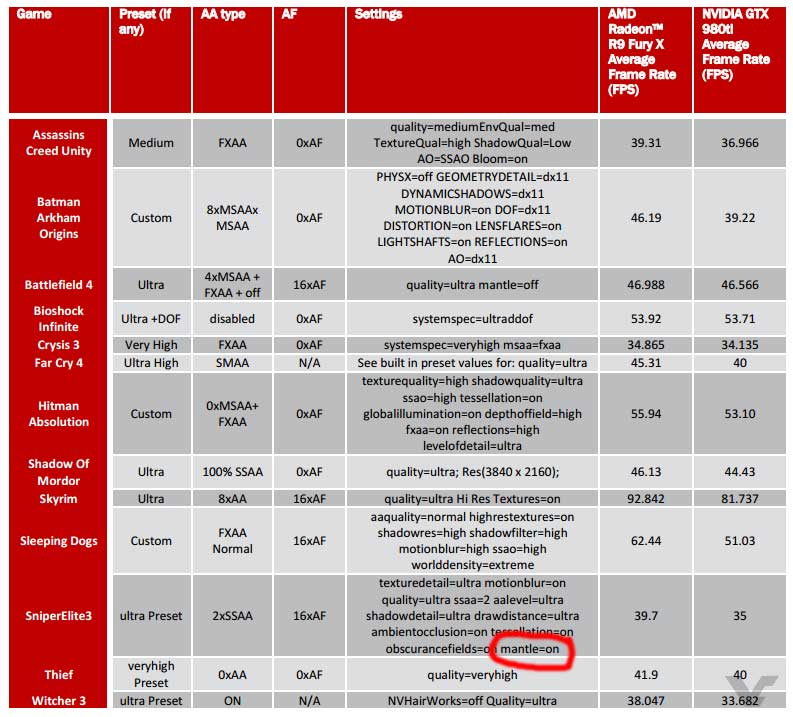

Yes, I know that the first chart was discussed and that Whycry noted the error in the VideoCardz article, but the same error appears on the main benchmark chart. Something isn't quite right, although I don't know what it is.

Unfortunately, there are errors in those charts.

Whycry has said there are errors, and he mentions them in the article.

I also noticed another one in the main benchmark chart. I've circled both in red.

Obviously something is slightly amiss: the 980 Ti clearly does not support Mantle, and Whycry reckons it isn't even supposed to say 980 Ti in the right-hand column of the overclocking performance chart. Quite what is going on remains to be seen.

From what I remember, Mantle didn't always give a single AMD card a boost at 4K, and sometimes it gave slightly lower fps. Maybe in Sniper Elite Mantle was showing fps gains, so they used that API on the Fury, unlike in other games where it showed no benefit. Some review sites use Mantle because all the game settings are the same apart from the API, so it's a valid comparison.
