-

Competitor rules

Please remember that any mention of competitors, hinting at competitors or offering to provide details of competitors will result in an account suspension. The full rules can be found under the 'Terms and Rules' link in the bottom right corner of your screen. Just don't mention competitors in any way, shape or form and you'll be OK.

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

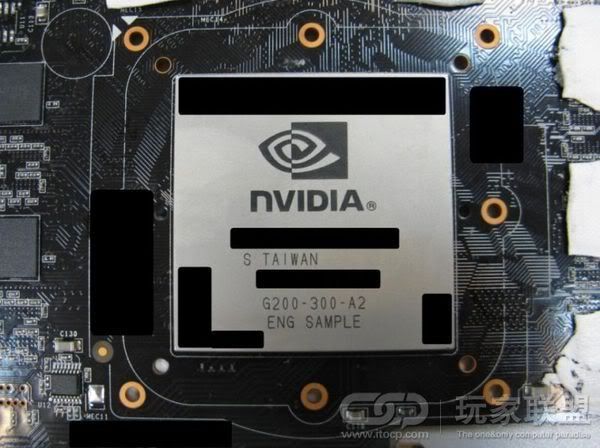

GT200 GTX280/260 "official specs"

- Thread starter straxusii


Someone just posted this on XS...

AMD is coming up with their much-awaited Radeon HD 4870 X2 next-generation graphics card, while NVIDIA has responded with its GeForce GTX 260 and GTX 280 dual core GPUs.

from this review

So it looks like both could be dual-GPU cards.


It's extremely important to distinguish between "dual GPU" and "dual-core GPU" in this context.

In essence, a GPU is a massively parallel processing machine, with several processing pipelines sharing a small cache, and collectively linked to a large shared memory (the video RAM). It's already a massively parallel system (the 8800GTX, for example, has 8 different groups of 16 processors, with each group of 16 sharing a small cache).

Now, the problems with multi-GPU solutions (whether dual-card or just dual-GPU) stem from having the GPUs access different memory. Everything must be loaded in twice, and there is virtually no communication between the different GPUs, as this involves unacceptable latencies. The only advantage is that you can double your effective memory bandwidth through this redundancy (RAID-style). However, having two GPUs on a single die, each sharing the same video RAM, could very well be just as efficient as having one big GPU. It all depends on the expense (in terms of latencies) of communicating between the GPUs.

...In short: If the dual-core GPUs both share the same memory, then there should be none of the "SLI / crossfire" issues that we see with multi-GPU solutions today. It should, in effect, behave like a single GPU.
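To put rough numbers on the mirroring point, here's a small Python sketch. It assumes a simplified model where every separate memory pool holds a full copy of the scene data and each GPU reads only from its own pool. The 2x 512 MB / 64 GB/s figures and the 1024 MB / 128 GB/s shared pool are illustrative assumptions, not specs for any real card.

```python
from dataclasses import dataclass


@dataclass
class CardConfig:
    pools: int           # number of separate video RAM pools (one per GPU if not shared)
    mb_per_pool: int     # capacity of each pool in MB
    gbs_per_pool: float  # bandwidth of each pool in GB/s


def total_memory_mb(card: CardConfig) -> int:
    # What the box says: the sum of all pools.
    return card.pools * card.mb_per_pool


def usable_memory_mb(card: CardConfig) -> int:
    # Every pool holds a full copy of the textures and geometry,
    # so extra pools add no usable capacity.
    return card.mb_per_pool


def aggregate_bandwidth_gbs(card: CardConfig) -> float:
    # Each GPU reads its own copy in parallel, so bandwidth adds up
    # (like reads spread across a RAID-1 mirror).
    return card.pools * card.gbs_per_pool


# Hypothetical configurations for illustration only.
dual_gpu = CardConfig(pools=2, mb_per_pool=512, gbs_per_pool=64.0)
shared_pool = CardConfig(pools=1, mb_per_pool=1024, gbs_per_pool=128.0)

for name, card in [("dual GPU, mirrored", dual_gpu), ("dual-core, shared", shared_pool)]:
    print(f"{name}: {total_memory_mb(card)} MB installed, "
          f"{usable_memory_mb(card)} MB usable, "
          f"{aggregate_bandwidth_gbs(card):.0f} GB/s")
```

Under these assumptions both layouts have the same installed memory and headline bandwidth, but only the shared pool lets the game use all of it for unique data.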


Here's a question: are there any games around atm, bar Crysis, that an 8800GTX won't run amazingly well?

Stalker: Shadow of Chernobyl benches at about 45fps on an 8800GTX at 1920x1200 with the settings I'd like to use (dynamic lighting and long draw distance are killers). That's without GPU MSAA, for which there's a driver hack. I'd like 60fps minimum (maybe 80 average) with MSAA, so I'm not sure the GTX 280 will do it.
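The gap is easier to see as frame times and a required speedup. This is a minimal Python sketch. It assumes frame rate scales linearly with GPU throughput, which it rarely does exactly, and it uses the 45fps no-MSAA benchmark as the baseline for both targets. Turning MSAA on would lower that baseline and raise the speedup needed.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps


def required_speedup(current_fps: float, target_fps: float) -> float:
    """How many times faster the card must be to hit the target (linear-scaling assumption)."""
    return target_fps / current_fps


current = 45.0  # Stalker at 1920x1200 on an 8800GTX, no MSAA (figure from the post)
for target in (60.0, 80.0):
    print(f"{target:.0f}fps needs {frame_time_ms(target):.1f} ms/frame "
          f"(now {frame_time_ms(current):.1f} ms): "
          f"{required_speedup(current, target):.2f}x faster")
# 60fps needs 16.7 ms/frame (now 22.2 ms): 1.33x faster
# 80fps needs 12.5 ms/frame (now 22.2 ms): 1.78x faster
```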

Stalker has a prequel with improved graphics due in August.

ArmA: Armed Assault runs at about half the speed of Stalker.

Gulp.

Soldato | Joined: 26 Aug 2004 | Posts: 5,154 | Location: South Wales

But:

WILL THIS BE THE CRYSIS KILLER?

And more importantly:

Will it crack Far Cry 2?

Think of Crysis as a sort of Oblivion.

I think this card will probably crack Crysis at good settings without the FPS dipping insanely low; not the god card for this game, but almost. Far Cry 2 will probably run better than Crysis anyway; it's being made by different people, I believe? Ubisoft.

Associate | Joined: 9 Mar 2008 | Posts: 1,575 | Location: Glasgow


Isn't Ubisoft the publisher? In fact, didn't EA buy them out? From memory, it was Crytek who made Far Cry and Ubisoft who published it. With Crysis it was Crytek and EA. Ah hell, I'm too stressed out to care.

Martyn

As the GT200 is apparently the 9800 GX2 with both of its cores stuck onto one chip, it's a proper single card now, not two bodged together.

Sure - I think that was mentioned in another thread. The point is that most people in this thread seem to think that this card is going to be twice as fast as a 9800GX2, which - going by the specs - certainly is not going to be the case.

What this does mean is that we will be able to have 8x G92 in SLI. However, looking at Crysis benchmarks, having all that render power is not actually going to make that much difference.

Believe it or not, I want to be proved wrong.

Caporegime | Joined: 8 Jul 2003 | Posts: 30,063 | Location: In a house

There's no doubting it will be faster, as it's not SLI and also has a bigger 512-bit bus, but I can't see it being twice as fast. It may be in games where the GX2 can't use SLI, where it drops down to a single GTS at GT speeds, but I can't see it being twice as fast as the GX2 in SLI-capable games, where it runs as two GTs.

Associate | Joined: 24 Jul 2006 | Posts: 1,803 | Location: Huntingdon

Almost certainly not - not in average FPS benchmarks anyway (see earlier for my views on the problems multi-GPU solutions have in other areas).

But really - when have we ever seen a release which is so much faster than the previous-generation SLI solution? Even the 8800GTX when released only beat the 7900GX2 by 0 to 25% in most cases.

I remember when I got my 8800GTX, it really gave me a poor view of my 7900GX2; it felt well over 50% faster.

The 9800GX2 has an effective 512-bit bus, but it's really 2x 256-bit... not quite the same. If the GT200 has a true 512-bit bus, then we could really see it crush the GX2.
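For reference, peak memory bandwidth is the bus width in bytes multiplied by the effective transfer rate. So one 512-bit bus and two 256-bit buses at the same memory clock give the same headline number. The real difference is whether that bandwidth feeds one pool or two mirrored ones. The sketch below uses a hypothetical 2.0 GT/s memory rate, which is not a quoted spec for either card.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_gts: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer x billions of transfers per second."""
    return bus_width_bits / 8 * transfer_rate_gts


RATE = 2.0  # hypothetical effective memory rate in GT/s (assumption, not a real spec)

single_512 = peak_bandwidth_gbs(512, RATE)    # one GPU, one 512-bit bus, one pool
dual_256 = 2 * peak_bandwidth_gbs(256, RATE)  # two GPUs, two 256-bit buses, two separate pools

print(single_512, dual_256)  # 128.0 128.0
```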

From what I hear, the GT200 will be dual GPUs on one die? Yet another real big bonus towards speed. That would be way faster than two GPUs split by a slow interconnecting bus (SLI), from a single-card perspective. Let alone whatever other improvements have been made on the GT200.


Soldato | Joined: 26 Aug 2004 | Posts: 5,154 | Location: South Wales


But would it be as efficient as a single high-end card? And would it work 100% in every game?


Since they're both sharing the same video memory, it should do. Read my post earlier.

Associate | Joined: 24 Jul 2006 | Posts: 1,803 | Location: Huntingdon


If it's two GPUs on one die, it will most definitely be seen as a single-card solution; it IS a "single" GPU.

IMHO: SLI is just a hash. It doesn't improve performance enough, it's not 100% compatible with all games, and you only REALLY get a 25% to 50% (if you're lucky) performance increase.

I would always rather have a next-gen card solve performance issues than try to bung some cards together.
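Taking those 25% to 50% SLI figures at face value, one way to see the complaint is to look at how much of its solo performance each card actually delivers. This is a minimal Python sketch. It assumes a game that supports SLI at all (otherwise the second card adds nothing) and uses a hypothetical 40fps single-card baseline.

```python
def sli_fps(single_card_fps: float, scaling: float) -> float:
    """Frame rate with two cards, given the extra fraction SLI adds (0.25 = +25%)."""
    return single_card_fps * (1.0 + scaling)


def per_card_efficiency(scaling: float, cards: int = 2) -> float:
    """Share of one card's solo performance that each card actually delivers."""
    return (1.0 + scaling) / cards


base = 40.0  # hypothetical single-card frame rate
for scaling in (0.0, 0.25, 0.50):
    print(f"+{scaling:.0%}: {sli_fps(base, scaling):.0f}fps, "
          f"each card at {per_card_efficiency(scaling):.1%} of its solo speed")
# +0%: 40fps, each card at 50.0% of its solo speed
# +25%: 50fps, each card at 62.5% of its solo speed
# +50%: 60fps, each card at 75.0% of its solo speed
```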


Soldato | Joined: 18 Oct 2002 | Posts: 12,185 | Location: England

Always, but SLI/CrossFire tech has shown some lovely increases in performance; 30-50% sounds about right...

Still, imagine two GT200s in SLI. I just couldn't begin to imagine the performance numbers on that.

I just hope they don't forget the other small features: proper HDMI output with 5.1 or 7.1 sound, better HD performance and output options/secondary mirroring technology... better AA/AF, and a 140mm super-silent cooling fan.


Soldato | Joined: 26 Aug 2004 | Posts: 5,154 | Location: South Wales


You're saying that, yet you have a GX2.

While I agree with the 25-50% increase with SLI, I don't think it's a total waste; it really depends on what you want to play. Plus I didn't mind too much with the GTs I have, as they're much cheaper than buying, say, two 300-quid cards.

I think NVIDIA should release another GPU with one extra core on it alongside these new ones, instead of releasing a GX2.

No problems at all, and an increase in every game. Then nobody would want or need a GX2 or SLI.
