No, that's not incompetence, it's clever feigned ignorance. The fact that they knew exactly how to gimp AMD to achieve that level of results shows these guys have half a clue how to set up a machine. A lot of what they did was utter nonsense: they knew full well that Ryzen (not Threadripper) doesn't like all 4 DIMM slots populated and will run the RAM at a lower MHz to stay stable. If they had used 3200MHz C14 RAM the results would be a lot better. Same with Game Mode: they can say "well, AMD named it Game Mode, so it's for gaming", and that's a get-out-of-jail card for them. Same with the cooler: "well, the Ryzen cooler would cost $40, so we can use an aftermarket cooler on the Intel rig as it doesn't come with one", etc.

That is not incompetence, that is a cleverly thought out and planned hatchet job on your rival.

I'm not excusing them at all, but I have a feeling it's more than just a hatchet job on their part. It will be interesting to see what happens if the follow-up tests ever happen and the results get made public, and whether they recant the results Intel is using in its press releases now.

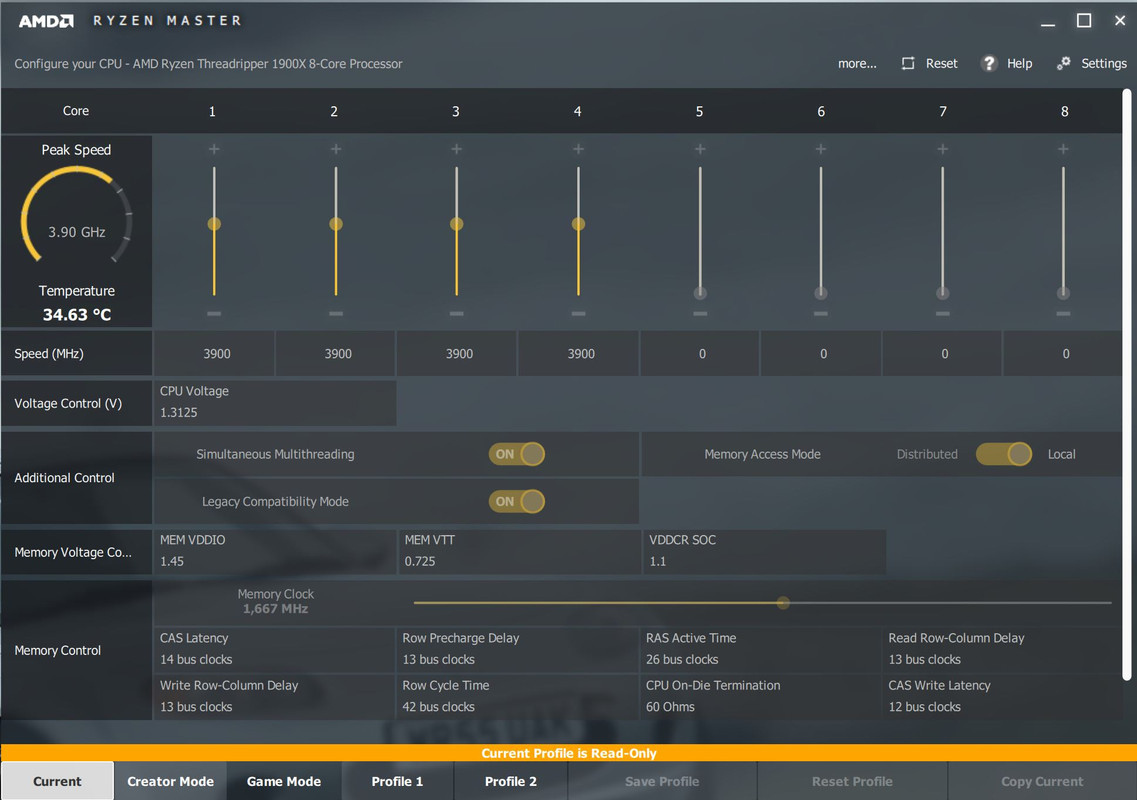

It makes an additional change for TR, which is to switch the memory mode between NUMA (local) and UMA (distributed). Game Mode sets local, which is a good thing.