Soldato

- Joined: 6 Feb 2010
- Posts: 14,582

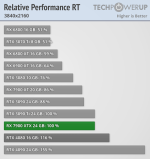

I've said from the very beginning that people (if they are willing) are essentially paying extra for the 4080 for Nvidia features (like DLSS and better RT performance), and that the 7900XTX is generally faster than it in 4K rasterization. The averages don't lie: the difference is 2% in raster (there is nothing further to argue about there).

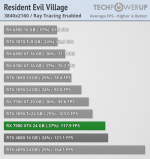

And in RT the 4080 is 20-45% faster in games that make heavy use of RT.

Plus:

- lower power consumption and less heat output
- DLSS, which is superior to FSR
- Frame Generation (FG)

The only advantages of the 7900XTX are:

- 2% faster in raster
- a better control panel
- less driver overhead in DX12 (though it's the opposite in DX10/11 titles)

At the end of the day both are bad value, but the 7900XTX is even worse value: for only 10% more money, the 4080 offers so much more than the 7900XTX.
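To put the "10% more money" point into numbers, here is a minimal Python sketch of relative performance per pound. It uses only the relative figures quoted above (7900XTX ~2% faster in raster, 4080 20-45% faster in heavy-RT games, 4080 ~10% dearer), normalised so the 7900XTX = 1.00. Treating those rounded figures as exact is an assumption, and the names below are illustrative.

```python
# Relative price/performance sketch using only the figures quoted in this thread.
# Everything is normalised so the 7900XTX = 1.00 for both price and performance.
# Assumption: the rounded forum figures are treated as exact.

def perf_per_price(perf: float, price: float) -> float:
    """Relative performance delivered per unit of relative price."""
    return perf / price

XTX_PRICE = 1.00
XTX_PERF = 1.00

PRICE_4080 = 1.10             # "for only 10% more money"
RASTER_4080 = 1.00 / 1.02     # the 7900XTX is ~2% faster in raster
RT_4080 = (1.20, 1.45)        # the 4080 is 20-45% faster in heavy-RT games

xtx_value = perf_per_price(XTX_PERF, XTX_PRICE)
raster_value_4080 = perf_per_price(RASTER_4080, PRICE_4080)
rt_values_4080 = [perf_per_price(p, PRICE_4080) for p in RT_4080]

print(f"4080 raster perf per £ vs 7900XTX: {raster_value_4080 / xtx_value - 1:+.1%}")
for perf, value in zip(RT_4080, rt_values_4080):
    # Compare the 4080's RT performance per pound against the 7900XTX baseline.
    print(f"4080 heavy-RT perf per £ vs 7900XTX (at +{perf - 1:.0%} RT): "
          f"{value / xtx_value - 1:+.1%}")
```

With these inputs the 4080 delivers roughly 11% less raster performance per pound, but roughly 9% to 32% more RT performance per pound, which is another way of saying the premium buys the Nvidia features rather than raster.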

As mentioned, the so-called 2% on the relative performance chart is only good as a quick reference, not an accurate representation of real-world use, because of the way it adds everything up and then divides it into an average (and some of the games in the test where the 4080 is faster are older titles known to favour Nvidia heavily, e.g. Civ VI, Witcher 3, Borderlands 3, Metro Exodus). Most people are more interested in how much faster a card is likely to be in the games they specifically play, and whether it is more likely to be faster in upcoming games in general.
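The averaging point can be illustrated with a small Python sketch. Two assumptions: the chart is treated as a plain arithmetic mean of per-game ratios (the description above, not a confirmed methodology), and the game names and ratios are hypothetical placeholders, not benchmark results.

```python
from statistics import fmean, median

# HYPOTHETICAL per-game ratios (7900XTX fps / RTX 4080 fps), made up for
# illustration only. Above 1.0 means the 7900XTX is faster in that title.
ratios = {
    "Game A": 1.08, "Game B": 1.06, "Game C": 1.05, "Game D": 1.04,
    "Game E": 1.03, "Game F": 0.92, "Game G": 0.90, "Game H": 0.88,
}

# Hypothetical: assume these are older titles that heavily favour one vendor.
older_titles = {"Game F", "Game G", "Game H"}

def average_over(games):
    """Arithmetic mean (add everything up, then divide) of the chosen games' ratios."""
    return fmean(ratios[g] for g in games)

overall = average_over(ratios)
without_older = average_over(g for g in ratios if g not in older_titles)
my_games = average_over(["Game A", "Game C", "Game G"])  # hypothetical personal library
typical = median(ratios.values())

print(f"Mean over all games:        {overall:.3f}")
print(f"Mean without older titles:  {without_older:.3f}")
print(f"Median over all games:      {typical:.3f}")
print(f"Mean over 'my' games only:  {my_games:.3f}")
```

With these made-up numbers the 7900XTX leads in five of eight titles and the median favours it by 3.5%, yet three outliers pull the arithmetic mean to 0.995 (the 4080 marginally ahead). Filtering to the games you actually play gives yet another answer.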

Simply put, if anyone cares enough about things like RT or DLSS and doesn't mind paying the extra, they should go for the 4080; those who only care about raw performance, don't care about RT, upscaling and whatnot, and aren't interested in spending £100-£200 extra could go for the 7900XTX. But as already mentioned, those who don't mind spending the extra for the 4080 would probably go all the way and stretch to the 4090.

With that said, the 7900XT 20GB is looking more and more like a tempting proposition, with its price dipping towards £750 and it now having better price/perf than the 7900XTX, 4070Ti and 4080.