Well, I think Nvidia is taking the p**** a bit here with the RTX 4070, if this spec ends up being correct:

NVIDIA RTX 4070 is just around the corner. It appears we might have the very first benchmark of the upcoming RTX 4070 GPU. The RTX 4070 non-Ti is the upcoming AD104-based mid-range card. It is replacing the Ampere RTX 3070 model that was released more than two years ago (October ’20) but later...

videocardz.com

I suppose we don't precisely know what impact the L2 cache will have, but I don't think it's a massive improvement.

These specs point towards 5888 shaders, the same as the RTX 3070.

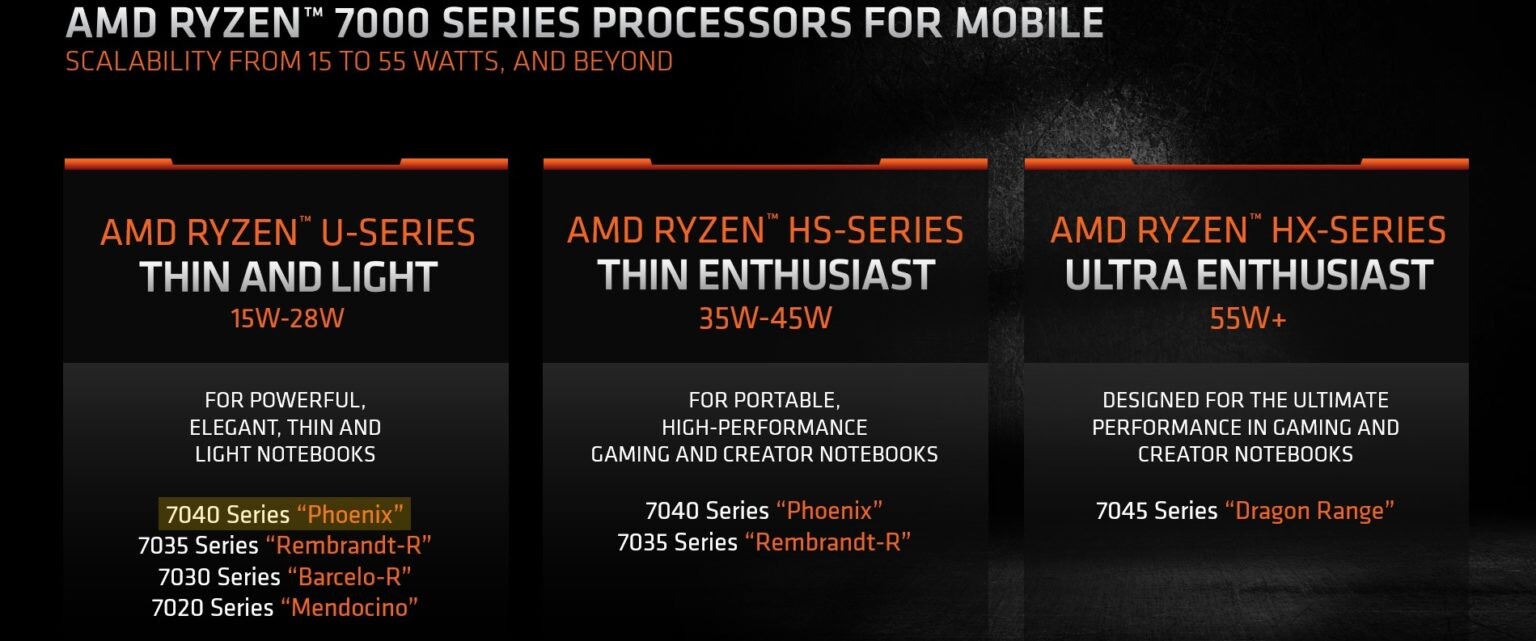

So it's not the typical increase in shader count that we've seen in previous generations. It looks like many of the higher-spec AD104 GPUs are going into laptops instead.

insider-gaming.com

and brag about it. I have lost all respect for CD Projekt RED. As for Nvidia, well, we know their games and are used to them doing these things.
