I see we still have certain people blaming games rather than their system/user error

In HZD you were going above 10GB VRAM if you played at 4K with 90% FOV, usually after 5-10 mins. Maybe they fixed it with a patch, idk, but it was a problem. ReBAR also increases the VRAM usage by a couple of hundred MB.

It doesn't matter, the fact is the card is weaker than AMD's in CoD, so by your higher standards you shouldn't use a card that can't even do 40 FPS at 1440p. But you have different standards.

Already been debunked many times, look at the patch notes for the game and at the videos people posted showing their playthrough of that area. Also, in the video I posted a while back of a 6800 XT vs 3080 (with the latest patch), it actually looked like the 6800 XT was having issues loading some textures compared to the 3080, but of course no one responded to that.

ReBAR does increase VRAM usage, but has it caused issues? Nope. Feel free to post evidence showing otherwise though. Also might be worth reading up on what exactly Resizable BAR/SAM does. Nvidia have a very good article on it.

https://www.nvidia.com/en-gb/geforce/news/geforce-rtx-30-series-resizable-bar-support/

We talking about facts? Oh dear, so is DLSS not allowed then? Why not? Is that because AMD "still" don't have their version out yet?

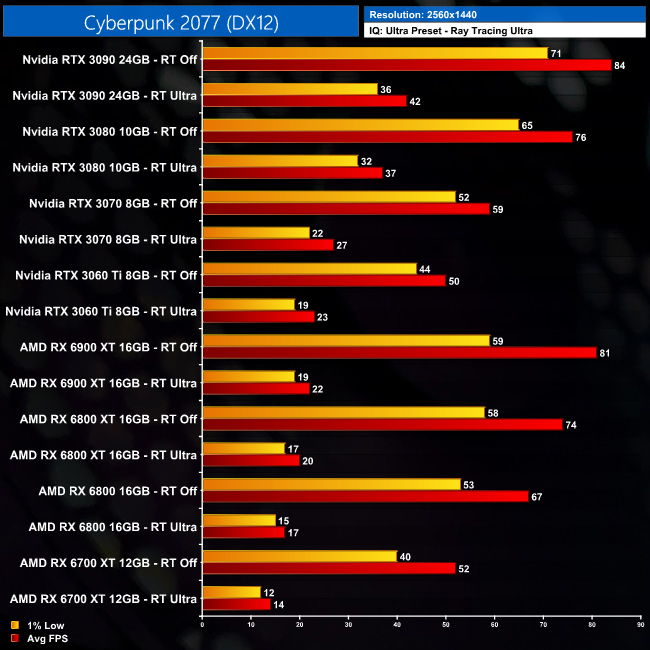

Fact is, in Cyberpunk:

DLSS quality + ray tracing on = superior experience because of better IQ on the "whole"

native res + ray tracing off = lesser experience because of worse IQ on the "whole"

Sure, I could go with the second option and get a constant 90+ fps at all times, including min fps, but I'd rather have the superior IQ option. Since the game is SP, I can cope with a min fps of 50 and 70-80 fps on average on my setup. I also play more often on my 3440x1440 monitor for FreeSync/G-Sync, so when the fps does drop to 50 in the very intense moments, you don't notice it.

Don't hate me if non 3080/3090 owners can't enjoy the game

Hahahahaha!

Godfall is definitely a wet fart.

But yeah, this thread is stuck in a loop now, so we can safely sum it up as this so far:

- 3080 "might" have a problem with its VRAM in 1-2 years' time, but by then we'll all be on the new cards anyway for the better ray tracing perf. and more grunt, not for more VRAM....

- RDNA 2 was DOA because of its inferior ray tracing perf. and lack of a DLSS equivalent

- people have yet to post a game where a 3080 is struggling because of its VRAM @1440p/4K with substantial evidence backing up said claim

Back I go to enjoy the sunshine now!

Will be sure to grab the popcorn for when I get back though

The difference is that a game like RE Village has 95% positive reviews even if the RT in the game is trashed by some reviewers. Days Gone has 92% positive reviews on Steam and it has no RT; it is also an old-gen game.

The heavily promoted great RT titles are all trash. So you can spend 100 hours inside the game looking at reflections.

Laughing that a card does 20 FPS in a heavily RT game sponsored by the competition, while your card does 30, is like laughing at a 3070 owner while you have 9GB VRAM.

Oh dear, have we gone back to basing game ratings on their optimisation/ray tracing perf?

Issues aside, Cyberpunk is actually a solid game and did very well in reviews. Heck, even Mack from Worth A Buy said it would have been GOTY if it weren't for the issues.

Days Gone is a great game though, but that's got nothing to do with it not having ray tracing....

Sorry forgot....... godfaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaalllllllllllllllllll

Your honour this person committed murder... Ok show us the evidence...... Godfalllllllllll....... Anything more substantial than that?....... Godfallllllllllllllllllll!......