DP was saying the chiplets idea is stupid and only noobs suggest it. He never did elaborate on why, though, when I asked.

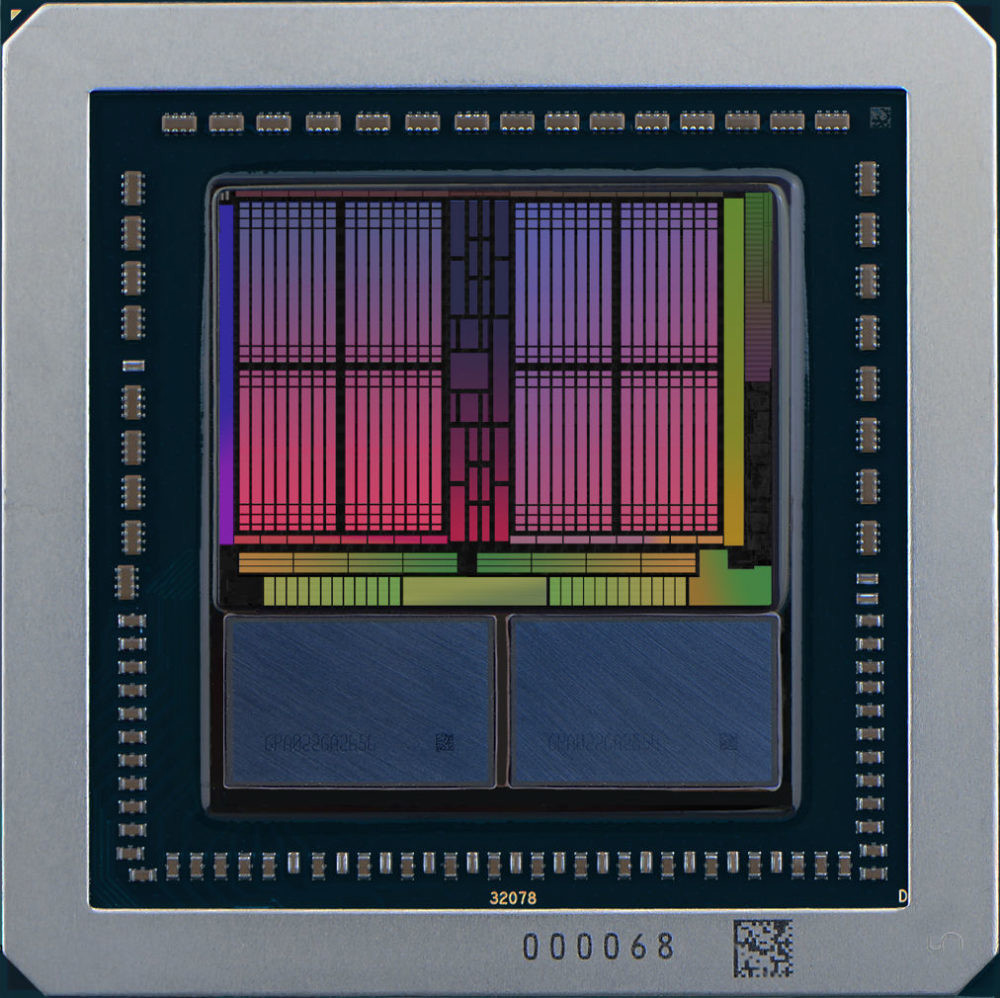

I'm sure that's why AMD have been pursuing it for the better part of a decade, intensified work on it under Raja and began building Infinity Fabric into Vega, and why NVIDIA, a couple of years ago, formally acknowledged they'd begun research into it and announced plans to adopt it in future. If they can leverage the kind of low cost (small dies), high yields, low design costs (modularity) and scaling that Zen has, that's a huge asset.
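To show why small dies help yields, here's a rough back-of-envelope sketch using the textbook Poisson yield model (Y = exp(-A × D0)). The defect density, die sizes and the one-600 mm² die vs four-150 mm² chiplets split are all hypothetical numbers I picked for illustration, not anything AMD or NVIDIA have published, and it ignores wafer edge loss, packaging and the extra area the interconnect itself costs.

```python
import math

def poisson_yield(die_area_mm2, defect_density_per_cm2):
    # Probability a die has zero defects: Y = exp(-A * D0), with A in cm^2
    return math.exp(-(die_area_mm2 / 100.0) * defect_density_per_cm2)

def silicon_per_good_gpu(die_area_mm2, dies_per_gpu, defect_density_per_cm2):
    # Assumes dies are tested before packaging (known-good die), so each die
    # slot costs area / yield mm^2 of wafer on average.
    y = poisson_yield(die_area_mm2, defect_density_per_cm2)
    return dies_per_gpu * die_area_mm2 / y

D0 = 0.1  # hypothetical defect density, defects per cm^2
mono = silicon_per_good_gpu(600, 1, D0)     # one big monolithic die
chiplet = silicon_per_good_gpu(150, 4, D0)  # same total area as 4 chiplets

print(f"monolithic: yield {poisson_yield(600, D0):.1%}, {mono:.0f} mm^2 per good GPU")
print(f"4 chiplets: yield {poisson_yield(150, D0):.1%}, {chiplet:.0f} mm^2 per good GPU")
```

With those made-up numbers the monolithic part burns roughly 1,090 mm² of wafer per good GPU versus roughly 700 mm² for the chiplet version, because a defect only scraps a 150 mm² chiplet instead of the whole 600 mm² die.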

I don't think the software/firmware/API interface with the driver is the issue. I think the holdup is scaling IF (or a similar interconnect) to the kind of bandwidth modern GPUs need while staying within a given package size, TDP and cost. If they can't find a solution for it in commercial products within the next 2 years, I could see them going optical.
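For a feel of the bandwidth problem, here's a crude sketch. Peak DRAM bandwidth is just bus width × per-pin data rate ÷ 8 (e.g. a 256-bit GDDR6 bus at 14 Gbps gives 448 GB/s). The cross-die part is a hypothetical worst-case model I'm assuming, not how AMD or NVIDIA actually route traffic: memory interleaved evenly across N chiplets, every chiplet reading uniformly from all of it, and no caching of remote data, so (N - 1)/N of all traffic has to cross the fabric.

```python
def dram_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    # Peak DRAM bandwidth in GB/s: bus width * per-pin rate / 8 bits per byte
    return bus_width_bits * data_rate_gbps / 8

def cross_die_traffic_gbs(total_bw_gbs, num_chiplets):
    # Hypothetical model: evenly interleaved memory, uniform access from every
    # chiplet, no remote caching. Then (N - 1) / N of all traffic is remote.
    return total_bw_gbs * (num_chiplets - 1) / num_chiplets

total = dram_bandwidth_gbs(256, 14)  # e.g. a 256-bit GDDR6 bus at 14 Gbps
for n in (2, 4, 8):
    print(f"{n} chiplets: {cross_die_traffic_gbs(total, n):.0f} GB/s across the fabric")
```

Even under this simplistic model, a 4-chiplet split of a 448 GB/s card pushes 336 GB/s through the die-to-die links, and that's before you scale up to high-end memory configs, which is why I think the interconnect (and its power cost) is the real obstacle.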